Design a CRISPR/Cas experiment with gRNA and HiPlex panels (potato)

Activate your virtual environment

source .venv/bin/activate

Create the reference sequences

Define the path to the download directory:

download_dir=$/PATH/TO/DOWNLOAD_DIR/

Or, create a new download dir:

cd ../

mkdir download

cd download

download_dir=$(pwd)

Download genomes and gene annotation data:

cd $download_dir

mkdir originals

cd originals

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/GeneFamilies/genefamily_data.HOMFAM.csv.gz

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/GeneFamilies/genefamily_data.ORTHOFAM.csv.gz

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/Genomes/stu.fasta.gz

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/GFF/stu/annotation.selected_transcript.all_features.stu.gff3.gz

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/GFF/stu/annotation.selected_transcript.exon_features.stu.gff3.gz

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/Annotation/annotation.selected_transcript.stu.csv.gz

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/IdConversion/id_conversion.stu.csv.gz

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/Descriptions/gene_description.stu.csv.gz

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/GO/go.stu.csv.gz

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/InterPro/interpro.stu.csv.gz

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/MapMan/mapman.stu.csv.gz

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/Fasta/proteome.selected_transcript.stu.fasta.gz

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/Fasta/transcripts.selected_transcript.stu.fasta.gz

wget https://ftp.psb.ugent.be/pub/plaza/plaza_public_dicots_05/Fasta/cds.selected_transcript.stu.fasta.gz

Unzip and change some formats for SMAP target-selection and place in SMAP_reference directory:

mkdir ../../SMAP_reference

zcat genefamily_data.HOMFAM.csv.gz | grep -v "#" > ../../SMAP_reference/genefamily_data.HOMFAM.csv

zcat genefamily_data.ORTHOFAM.csv.gz | grep -v "#" > ../../SMAP_reference/genefamily_data.ORTHOFAM.csv

zcat stu.fasta.gz > ../../SMAP_reference/stu.fasta

zcat annotation.selected_transcript.stu.csv.gz > ../../SMAP_reference/annotation.selected_transcript.stu.csv

zcat annotation.selected_transcript.all_features.stu.gff3.gz > ../../SMAP_reference/annotation.selected_transcript.all_features.stu.gff3

These files may be used later:

zcat annotation.selected_transcript.all_features.stu.gff3.gz > ../../SMAP_reference/annotation.selected_transcript.all_features.stu.gff3

zcat annotation.selected_transcript.exon_features.stu.gff3.gz > ../../SMAP_reference/annotation.selected_transcript.exon_features.stu.gff3

zcat gene_description.stu.csv.gz > ../../SMAP_reference/gene_description.stu.csv

zcat go.stu.csv.gz > ../../SMAP_reference/go.stu.csv

zcat interpro.stu.csv.gz > ../../SMAP_reference/interpro.stu.csv

zcat mapman.stu.csv.gz > ../../SMAP_reference/mapman.stu.csv

zcat proteome.selected_transcript.stu.fasta.gz > ../../SMAP_reference/proteome.selected_transcript.stu.fasta

zcat transcripts.selected_transcript.stu.fasta.gz > ../../SMAP_reference/transcripts.selected_transcript.stu.fasta

zcat cds.selected_transcript.stu.fasta.gz > ../../SMAP_reference/cds.selected_transcript.stu.fasta

Create short_list of homology groups in the SMAP_reference directory:

cd ../../SMAP_reference

echo "HOM05D000431" > shortlist_HOM.tsv

Add as many different homology groups as you like:

echo "HOM05D000432" >> shortlist_HOM.tsv

Create fasta reference files per homology group with SMAP target-selection (use ./ notation to indicate the path to this directory, otherwise the script tries to write files to the root of the system, where is has no write access rights).:

python3 ../smap/utilities/SMAP_target-selection.py ./annotation.selected_transcript.all_features.stu.gff3 ./stu.fasta ./genefamily_data.HOMFAM.csv stu --region 1000 --hom_groups ./shortlist_HOM.tsv

Troubleshooting

In some cases the GFF file contains formatting errors in a subset of the genes, that cause problems when processing the GFF file with gffutils. In this case a quick workaround is to try to extract the gff features from the candidate genes only, and use that GFF file as input for SMAP target-selection.

Create a short_list of candidate genes for the list of selected homology groups of the species of interest (the corresponding gene_IDs are listed in the third column of the file genefamily_data.HOMFAM.csv)

grep -f shortlist_HOM.tsv ./genefamily_data.HOMFAM.csv | grep "stu" | cut -f3 > genelist_HOM05D000431_HOM05D000432.txt

Extract the gff features for each of the candidate genes from the original GFF file

grep -f genelist_HOM05D000431_HOM05D000432.txt ./annotation.selected_transcript.all_features.stu.gff3 > annotation.selected_transcript.all_features.HOM05D000431_HOM05D000432.stu.gff3

Run SMAP target selection again, using the short GFF file

python3 ../smap/utilities/SMAP_target-selection.py ./annotation.selected_transcript.all_features.HOM05D000431_HOM05D000432.stu.gff3 ./stu.fasta ./genefamily_data.HOMFAM.csv stu --region 1000 --hom_groups ./shortlist_HOM.tsv

Output

SMAP target-selection will report for how many homology groups it was not possible to extract any sequence of the given species.

By default, SMAP target-selection provides several types of output.

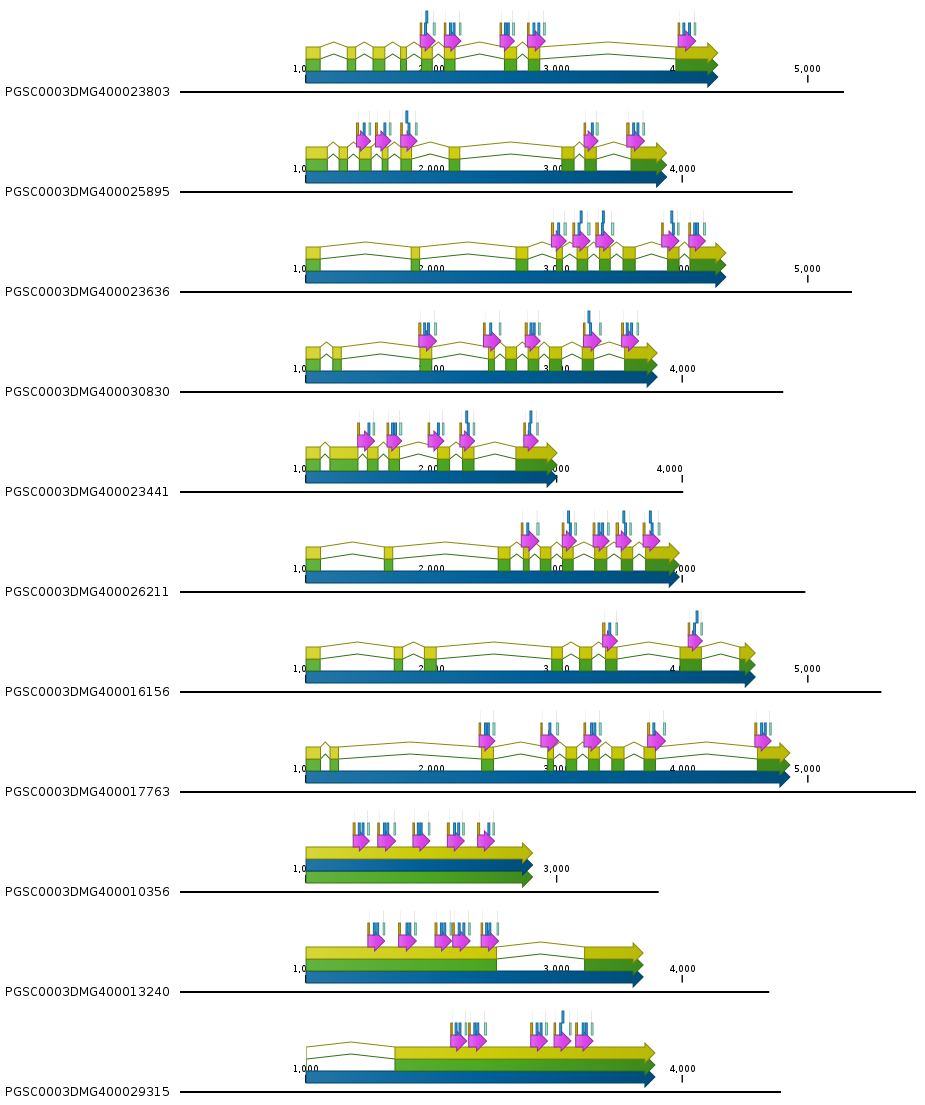

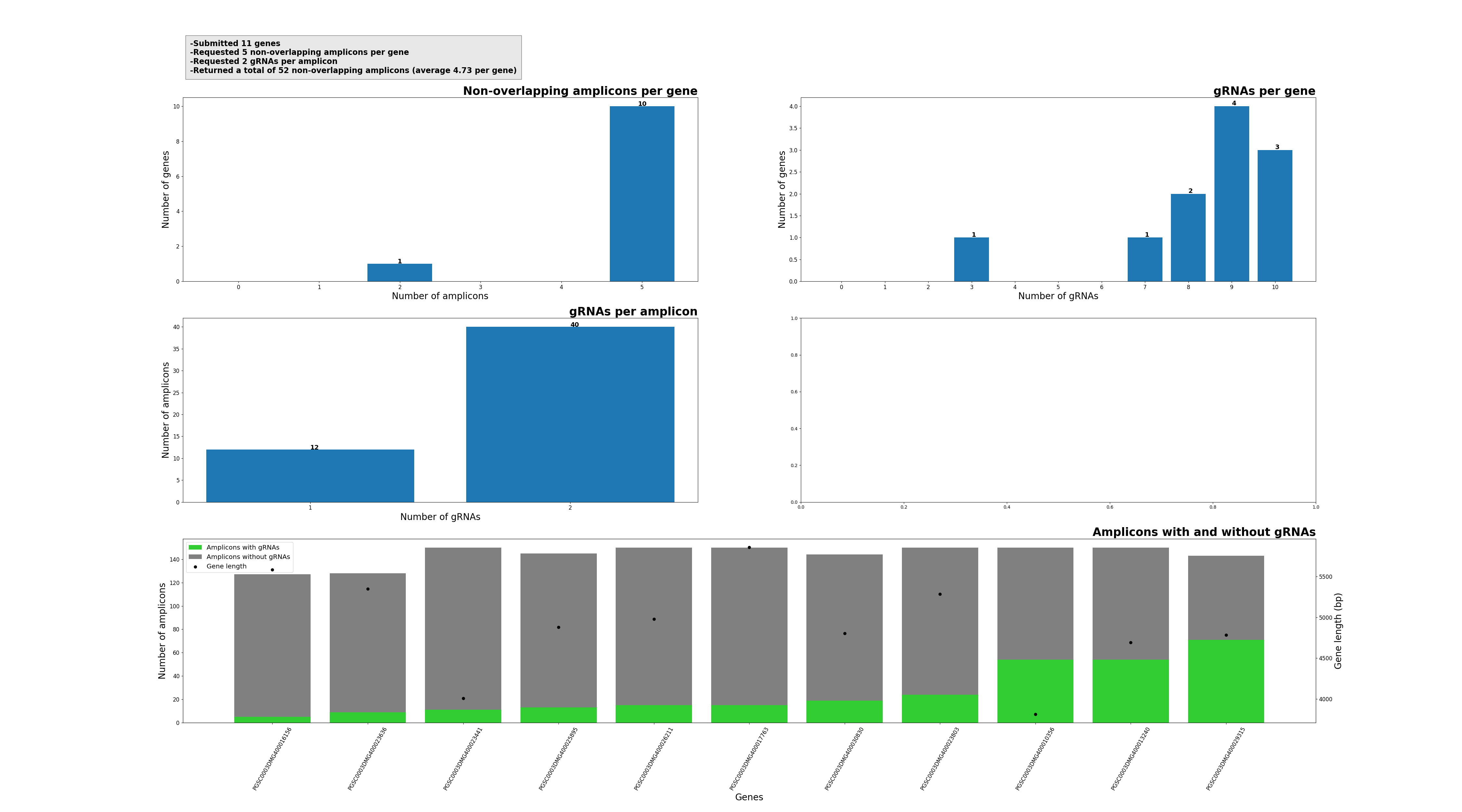

SMAP target-selection creates separate files per homology group.1. A FASTA file with all extracted gene sequences encoded on the positive strand.#. A GFF file containing the selected gene, exon, mRNA and CDS features.These output files can immediately be viewed by any genome browser, to check the integrity of the gene models prior to any amplicon or gRNA design. Example of the FASTA file.

Example of the FASTA file.

>PGSC0003DMG400023803

ACTTGTTGCCACCGCTTCACTCATTTTGACCCGAATCCACCGACAAGGAAAATGTTTTCAATTATTTTTTAAAGAAAACATTTTCTTGTAAAATAAGTTTTTATCTTATTTTCTAGTAATTTCGTAAGCAAGTCTTTTGATCGATGAAACATAACAAAATTAAAAAAATATTTTTTCTCCATACCTAACACAAACGTTGAAATTCGCCACATATTTAAAGGATATTATAATAAATAAATTATTATTACTATCAAATAAACGAATTAATTAGGAAAAATAAAAAGTAAAATAATTTTCTACTACTATAAATAGGGAAGAGATACTTTCATAATGGAATGTAAGACTTTTAACGCATGAGAGCTTTCAATAAAAAAGAAATATTCTTCATTCCTTTTTTTTCACTTTTTTTCTCTTCACCAATTCACACTTTGTCCCTATTACTCCCTAGAATCTCTCGCTCTGTCTCATGCACACACAGACACACACTAGAGGATTCCTTGCTTTAGCTTCAAATCTCTGCTAATAATCTTGATTTCATTTTTCTCTCCTTTTCTCCCACCATAACCCCATATCAATTTTGTTCTACCCATCTTCAAAGGCCCACAATTTCCCAATCTTTGTGCTACAAGAAACCCTAGTTCTTCTAAAATTCACAGATTCTCAAATACCCTTTTCCTGATTTGGTGAATATATGTTGATTTTGGTTCATATTACGTTGTAATTCAGCTTAAAGGTGTTGAATTCAGGTTGGTTTTGGAGTTTGGATTTTGGGATTTTATGGGTATTTTGTAAATTGCTTTAAAGATTGATCTTTTTTTTTGTTAAATTGAAAACCCCCTCATTCCCAATTTTGCATCTTGCAGCAGAATTTGGGTTTGATTGTTGTTTCAAAGGGGAGAAAGTTTGAAGCTCAAGTTTTGGTGGATTTTGTGGTTTTGAATTGAAGGGATTTTTTTTTGTTTTTTTTTATATGTAGTGAAGTTTATTTAAGGATAAGAAGATGGAGAAATACGAGCTTGTGAAGGATATAGGGTCTGGGAATTTTGGTGTTGCAAGGCTCATGAGGAACAAGGAGACCAAAGAACTCGTGGCAATGAAATACATTGAGAGAGGACACAAGGTTTGCATAATTACTGTCTTCTATCATCAATCTAACATCTTCCAATCTTTTTAAATATCTGATGCTCATTGTTTTCGTTATTGTTAGTGTGTTTTTTCCCTCTTAATTATCAGTTAAACATTTTTTAATCAACCAGGTCCCCTGCTCTCTTTTTTATATGCAATATCATATAAGCTTCCTTAGATTTGAGATGCCACTTCTGTTGTAGATTGATGAGAATGTAGCAAGGGAGATCATTAATCATAAATCACTTCGACATCCAAACATAATTCGCTTCAAGGAGGCAAGTGTGTTCATATCATTTACTATTTCGCAATGCCCTATTGCTTTCATGGTGTTTTCAATTGAAAATGGAAAGGAAAGTTGATGAATAACTGGCCTAAACTGTTGTTCATTTGTTTTGTGAGATGCAGGTGGTATTGACTCCCACTCATCTTGCCATTGTTATGGAATATGCAGCTGGGGGAGAACTGTTTGAGCGCATTTGCAATGCAGGAAGGTTCAGTGAAGATGAGGTATAGTTAGAATTTCTAAATTCTGTAATTTTAGAAGGCCACACTTATGGGATCTTAAGAGCCTTGATTCTCTATCTTTAATGCCTCTCTCATTTTGTTCTTGCTTCTGTAATTTTAGGCCAGATACTTTTTCCAGCAGCTTATTTCAGGTGTCCACTACTGTCACAACATGGTGAGCAATATACAAGAATCTACAGAAAATTATTCATTACTGTGAATATATAAAATGCCATCTTTTTAATATAAGGGAGCTTAGTCCTCTTCAAATTTCCATTGCCTCTGCAGCAAATATGTCATAGAGACCTGAAGCTGGAGAATACCCTTCTTGATGGAAGTGCAGCTCCACGCTTGAAGATATGTGATTTTGGATACTCAAAGGTTGATATGTTCTGCTGAATGGTAGATATAGACATACTATCCCCATAGGGATCACTAAGATGCTCATAGTTTTCTTCTGCAAGTGCAGTCGTCCCTGTTGCATTCGAGGCCAAAATCAACTGTTGGGACTCCAGCTTATATTGCTCCAGAGGTCCTCTCCAGAAGGGAATATGATGGCAAGGTAGAGTAATTATGTGATTTTTTACATTTGGTGGAAATGCGTTATGCAAATTATTTTTCTCTGGCATCCATCTGTGTGGAGCTGAATTTGCTACCATATGTGTCATTTATTTTTATGGTATATACTACCTTTCCTCTTATGAGTAGTTAAGAAACTACTATAACTGGTCGCTCTATGACTTGTTAAGAAGCCACTATAACTCGCTCTATACTTGTTAAGAAACCACTATAACTGGTTGTTCTATGACTTGTTGAATTACAGATTATGCTTCCATCTTTGTGTAAATTGCATCTTTTTGCTTACACCCTCCCCTTCTTTCCTAAAGGTAGAGTTTTTTTACTGATCTTTATTCTTAGGCCGTGAACGTTTGCTTGTGATTATTTCAGCTGGCTGATGTCTGGTCATGTGGAGTGACACTTTACGTGATGCTGGTTGGGGCATACCCTTTTGAAGACCAGGAGGATCCAAAGAACTTTAGGAAAACTATTCAAGTGAGTATTTTCAAGTCTTCATATATCCGACGAACTTCATGCGATGCTTACTCCTTTTAATTTGGCTTTGATGTTTCTCTTCCAGCGAATAATGGCGGTACAGTACAAGATTCCTGACTATGTTCACATATCGCAAGATTGTAGGCACCTTCTCTCTCGCATATTTGTTGCCAATTCCGCAAGGGTATGCTCTCTTCTATCTTTATTGGGTGTATTCTAGTGGAAGTTTGCTTGAATTTTCAATGGGCTAACAATCTGATAGAGATGTTTTTTTTGATGAAGAACAATCTGATAGAGATGTTATGTGTTTAATGCATCATTTACTGTTACACAATGGGGTCTATGCATATGGCGTTGTGGTGCCATTTTCATCCATGCACATTTGACAGATTATCCTCCAATTAGGTCATCAGTGTTACCATATACTGTATATTTGAGATCGCCCTTATCTGAGCCAAAGAAAACTATGATATGTTGGATAATTACGTCAACCTTCCAACACCCTTTTTTACTTGATCTGAGCGTCATCCTTGGTCCCAGTAGTAACCCCTGCACTCTAGGAGTGAGTCGGTGTTGCTCATTTAGATGAATGCAACTAGATAATCTTATTCCGTGGTTCTTGTGAGGGTACTCTGTTGTTTAATCCTCCAACTAAATTTGAGTTAAATGTCTAAGACTACAACAATTGCTTGTGTCATTAAGTAAGCAGTGTTGTTTAGTCAGTCGGATCTTATTGCACTTGCCTACCCTATTCTTAAACGATCAGTTCTCCCTCCCCAAAAGGATGACGTTAACTTCTAATAGTGTGTCCTCTAAACAAATGGAGTTGGGATAGTTGTTTTTTAAATTCAGTTGCTACTGTATTAATGATTCCTTGTAACTTGGCTTTACGAAATGAGAGAGAATCAGAATCATCCAGAGGGGAAGTTAAGTGATATCTCAATTTGATGGTAATTTATGATTTTGTAATTGTTCCTATAATTGTAATGGCAGTCTGCATTTGCAATCTTACCCTTGAGAAAAATCTGAATCAACGTCTGCTTCGTCATCTCTTCAAACTTTCAACATTATAAAGTGTGTATAGCTCTGGAACATGTCATAAATAAACAACATATGCACTAGTATTAAATTTTTGCTTTCATTTCTCTGCTCCTGGCATTCTTACCATATTGGAGGGGGGAAAAGAAAAAGGAGGAAGTTATAACATATTTTAAGTAGGAGGATATTGTGGCTTGTCTAATAAGCAGGTTTATTTTGCAGAGAATCACAATCAAAGAAATCAAGTCGCACCCATGGTTTTTGAAGAATTTGCCTAGGGAGTTGACAGAAGCAGCACAGGCAGCTTATTATAGAAAAGAAAACCCGACATTTTCGCTTCAGAGTGTGGAGGAGATCATGAAAATTGTGGAAGAGGCAAAGACCCCTCCTCCAGTTTCCCGTTCAGTATCAGGTTTTGGCTGGGGAGGTGAAGAAGAAGAAGAGGAGAAGGAAGGAGATGTAGAAGAAGAAGTAGAGGAGGAGGAGGATGAAGAAGAAGAAGACGAATATGACAAACAAGTGAAACAAGCACACCAAAGCTTAGGGGAAGTTCGTCTCACCTAAGCGTAAATTATCTTGCTTGCTCGCGGAGAACTGTGGACACACATATAGCCTATTATGTCTTTGGCATTTCCTGTTTGTGTAAAAGTTGTTTCACCTGGGCCTACTTTATCCGTTTTGCTTGTAGAAACCTATTGCTATTAAGTGTGGCATTTCCTGGTCGTGTCAAGATTTTCTTTCTTCTGTTGTGTGGAACTTAAAAGAAGTCTGTAAATTTCGAGTTTTGAGGTAAGGAAGCTTGTGAATTATATTTTCTTAAGACAAAGGCATTGGTGTACTGAAGTTGAACCTTAGATGAGCCTGAGTTACAATTAACAGGAAACTTCGGTAAGTTTTGTTGTATGCATCTTAGATATATGCAGAGAATTTGCATACATGGTCCTTATGGTCTTTCAGTCTTATTTGCTTTTTATGGGTTTTGTTATTACTGTTGATGTTTGAACTCGTCAGTTTTTACATACATTGGATGACAAGCATGGTGGATTCAGAAAAGTAAAGGTAACGTGTTTGGGATTTGAACTTGGAACCTTACAATGAGTTTGGGAAAACCTAAACTATTGAGTTAATTTCTAGTCTTGTGTTAACGGGATTCGGAGCATCACTACCTTTGAAGTAAAGGTATAATCTGCGTACATTCTGTTCTGACCTCGCTTTATGGGACTAGTATGTTGTTGTTAATGAAGAATTAAGATGAGAAGATATATAATCCTAATGGTACAGTTTTTAGAGTCCAATTCATAATATATTTTTTCAATGTGGACAATGACAGCAATGATATTAATCAATTTAGTTAAGGAAGGATTACCTCACAATTAATTATTATTTCAATTCTTCTTTTAACCGATTCATCTAAATTTAGATTTTAATCAAGCAATGTTCAATATTTGATATAGTAATTCAAAATATGAGATGTAAAAATTAGAATAAAGCAGGCCGTTCTTTTGTTAAGAAAAAAAGGTTAGAAAAGCCAAGAGTGAAAGCAAGGTTGAGAAATATGAAATTC

>PGSC0003DMG400025895

TAAAATTTTTCGTGAAATATAAGTAATTGTGCATGGTGGTGAGTGGTGACCAATCCCTGCCCCATACTGCCTCAACTATCAACCAATCAACTCCAGAGATCATGCCTACCTTTTCTCAAAAAAATTTAAAAATGTTGTAAAATTTGTGCAATTGACACCAAATCCTACACCAACACTTGGACGCTTTCTCGTGCTCTGTGTAATAACCTTATCCACCCCCTCCCCCCACCCACAGGCCACAGGGACTCTTTCGTCATTCTCACGCCACTGAACCCAATAAACAAAGGTACTTTTGTCATTAAACTAATTTATTCTCAAATAAACAAAAAAGAAAAATCTGTAATTATTCAACAATATAGGAGAAATATAAAAGAGACAATACTGTAGTCTATGATTTCGTGTTCAAACTCTAATGATATACGTATAAATTTGAATTAACCGGATGAATGAATTTCAAATATTATATTATCATACCTAAAATAGAGTATCTTTTACCAACAAAATGAGTAAAGTAATAAAAAATGATGTCTGCGAGTGTACTTTGGAAACCCAAAGAAGAAGTAGCTGAAAAAACTAATACTAATAAAGAAAGAAAGAAAGAAGAAAAGCAGCTTACTTCACGAGCTCCTCTGAGCACACAACAAAAAAGATATTTATATATATGTTGATGAAGAACACGTCTGCTCAATCAAAACCCTAACAGAACTGCAATCTCATCATCTACTTTAATTTGCTGTAGAGAAGAGAGAGAAGGTAAGTACAGATTTCACGGGATTGAGCTTATCATCACTTGTTGTTGCCGTCCCTGTCTTCAACTACCAACTATACTCAATCCTGTGATTCATATTACTCCACGTAACACTTCTGATTCAATATTTTTTCCTTGATTTGAGAGTCCTTTGTTTGATGCTGGTTTTTTTTTTTTTTTGGGCCTTTTAGGTGGGTTGTAATAATGTGTGAGGTAGCTTGTTCAAGTTAAAAAGAGAGGTTGTGTTAAATTATGGATCGGACGGCAGTGACAGTAGGACCAGGTATGGACGTACCAATCATGCACGATAGTGATAGATATGAACTTGTACGGGATATTGGTGCTGGGAATTTTGGTGTTGCAAGGCTTATGAGAGATAGGCAGACTAATGAACTTGTTGCTGTTAAGTACATCGAGAGAGGTGAGAAGGTTAGTTGAGATTTCACCTTTTTTATTGGATTTGTGAAGATTCAATTTGTTCTGCAAATTACTAGTTATTTGTTGATGAATTGCAGATTGATGAAAACGTTAAGAGAGAAATCATCAACCATAGATCATTGAGGCACCCTAACATAGTCAGATTCAAAGAGGTATTATATCTACTCAAGATAGTTGTTCGATGATGATGACTTAGGTATTCATATTTATAGTTAATTATGGGAAATTTGGTTTTCAGGTCATATTGACACCAACTCATTTGGCTATTGTGATGGAATTTGCATCTGGAGGGGAGCTGTTTGAGCGCATATGTAATGCTGGTCGTTTTAGCGAAGATGAGGTAAGTATTTTAAAAAATGAATTTGGTTGTCATGGCACTTATTCCTGGATATATTTATAGGCTATTAACTGTAATTTTAGGCACGGTTTTTCTTCCAACAACTCATATCAGGGGTCAGCTATTGTCATGCTATGGTAAGTCCACTGTTCTTCTCTGCTTTCATAATTGAAAATCTTTTGAGTTTGTGAAATTTTTAATGATTTGCTTGCAATTGATTCTTTTTATGAAGCAAGTGTGCCATAGAGACTTGAAATTAGAGAATACATTACTGGATGGTAGTCCTGCACCAAGGCTAAAGATTTGTGATTTTGGATATTCTAAGGTATTGTTTTCTTCACTCTGCTATTTTCTGGGACGGTTTTGCCACAAGTTGATTCCTAACCTCCCCCAAAGAAAGAAAACAAATTATTGAGTATTGGATTAAACCGGTCCAAGTAGAGTGAAATGGATAGTGAGGATTCATATGCAGATCCAACTAGTTTGGGATTGAGGCGTTGTTGTTGTATATATGCTGTTATATCTCAATTCCGTCAAGTGTTTGCTTCTTTCTTCTATCCTCCCTTCTCCTTTATTCATATGTCCTTACCGTCATTTTGACTATTGTTGTATGCAGTCCTCAGTGTTGCATTCACAACCAAAGTCAACTGTTGGTACACCTGCATATATTGCTCCAGAAGTGTTATTGAAGAAAGAATATGACGGGAAGGTATATCTTCTTTTTTTTTATTCTTACATAGAGCAGATGGCTGGTTGAGTTAGTATTTGAATAATGACAATATTTTTAATACAATGCTGTTGTGTTGAGAAAGAACTAATTGAACATTTTGAGATGTCGATCATCAGTGTGTGACAATGGTGGGAAATTGGTCAGGGAACACAGTTTTAGGAGTGTTGTTCCTTACTTGGTGTGTGAGGACTTTTCAACTTATGCAGGACTACGTTCAGGCTAACGGAAAGTGCAGCTATCTTTAAAATTAATCTTTTCCGATTTAAGGAAAAAGAAAAGAAAATTATATAGATGCTTAGAAACGAACATCTTTTCTTGATGTTTTTTCCACCAGAAATGCTGTATGTTTTTTTTATGTTCCTCTCTTTATTTCTAAACAGGAAAGTATCAGAGAGATAGACAATTTTGTCATTACGAGGAGGCTAACTTAATGTTACTTTTTTGGATAAAGTAAATGATATAGTGAACCATAAGACCCTAATGTGCAAAATCAACATTTCAATACTCACAATTGAGGGACTTGAAATAGTTTACTGAAGTAATTTCAATCTTCAAAAATGTGGTCAAACTTTTTGCCACTTTGAACAGTTCCTTAGCCATTTTGCTTGTATTGATGTTTGTAACAATTTGACAATGCTTTAGTAAACATTTAGAGCAGTAGAAAATTTTCCATCTTGTTATGGTTTTGATGATTTAACATTTCCTTTTTGTAATCTATTTTATTCACTTAACTTTTTTGATGATCTTGTTAATTGTTTTTCTTCATTTTCTCAAACTAATGCAGATTGCAGATGTCTGGTCTTGTGGAGTGACTTTGTATGTCATGCTGGTGGGTGCATACCCTTTTGAAGACCCAGAGGAACCTAAAAATTTTCGGAAGACAATACAGGTAACTTCTTATGCATATTGTTAGTGCAAAATCCTCATAATCTGTCTTATTTGACTCAAATCATTTCCTGTTATGCAGCGAATCTTGAACGTACAGTATTCAATTCCTGATTATGTACATATCTCTCCTGAATGTCGTCATCTAATATCAAGGATTTTTGTTGCAGATCCTGCAAAGGTAATCTTTTCCACAAATAATTTCAGTGTTCTTTCTTCATATTTTCTTTGGTCATCTTCTGTCTCAATATGTTTAAGGTCTTTCTAGTAATTGTCGGTTGTGGTGTTGTAACACTGTGTTGCATTGCTCATAACTATTTGAACTTCAATCCTGACTTGGTTAGAAAAATCTTATACTCAAGTGTTCTGGAGTTCAATGACTTTTGTTAGAAAATTTCTCTTATGTGAGGTTTCTTTCTTTCATCATGCTTTATTTTTGCATTGCGACAGAGGATATCAATCCCCGAGATCAAGAACCATGAGTGGTTCTTGAAGAACCTACCTGCAGATCTCATGGATAATACTACAAACAACCAGTTTGAGGAGCCAGATCAACGTATGCAGAGCATTGACGAAATCATGCAGATAATAACTGAGGCCACCATTCCTGCTGCTGGGACCAACAGCCTTAATCATTACCTCACTGGAAGCTTGGACATTGACGATGACATGGAAGAAGATTTGGAGAGTGATCCTGACCTCGATATCGATAGCAGTGGAGAGATTGTCTATGCAATGTAAAAGATATGTGGATGTCTAAATTGACAACAGATCAAATATAACTAGTTTGAGTTCCACAGTTCTTAATCAACTGGTCGATCTTTTGGTATCCACTGTTTGTATATGAAATGCAAGTTTGCAGCAATTCTGGTTCTCCTCTCTTTGTTTTCTTTAGTGACTGTTGCTGTTATGTATCGCTTGATTTCACTTTCTAATTAATGTGTGTTATTGCAAAAACTACTGTGTAACGATATAATTTCACAGTAATGCTTACTCTGTAGCTCTAAATTTTCTTCTGTTACTAAATGTAAAATAGTTTTAATCAAATCTTGCATGCATGTGACACTGTTTTGTGAATTGCTTATACATATCTTTCATTTGAATAATAAAAAAAAACGTGGTTACATCTATCTTCTATTAAATGATTACTAGTCATGACTGATGTACAAATACACCTTTACTTTGCTAATTATTTTGTTTTTTAAGTATTAGATGTCATAATGTTGATATGCTATACACAAAGACCAAAGTACACCAAGACTAAAAAAGCTCTTCTTCCGAATCCCTTCATTCCATCTTATAACTTGTGTTTCTCATATAAGGTCCCGAATAACTCTGTCCACTTTCAGAAGTATGTAGCAAGTTCTGGTAGTTCTTCATTTAATTCTCTTGTTACTCCAGTCCCTAACTTGACACCCTAAACAACATATATTTGTATAATATCAATAGGATCAGAAGTTGTATCAAATCGGAAATAGTGACATGTTTCAATTCAAGCTTACAGATTGCTTACTTAAGGTTTATGCCATTCTTGTTTCTTAGGCTTCCTGGAACACTCCCTTTGAGACGGTTGTTTTGGAGAAACCTATAAAACCAGAAATTTCAGTTATGGACTTACAGAAATGAACATTAATCATCAAATATACAAAATCAAAAACTGAGTTAAGTAGATAAAAAAAACAAAGCAGCATACACTTCTCGAAGTTTAGGTAGTTTTCCTAACGATTCAGGGATTGATCCT

>PGSC0003DMG400023636

TATTTATGTATCAAGAGCCTTGTCATTTCGAATTCCGTTGAATGGTTGTAGTTTCGAGACTCACGTCTAGAATAGCCAGGTGAGACATTCGGTTCATGAATCGGAAACAGTTGTTGTCTTTTATCACCGATAAAAATTGTGGTGGAATGAATAAAACTCTCCTGTCTTAATCAATAATCTCGAGTTCGAATGCAAGTTTGGAGTTGAAAAAGGAAATAATGTCATGAAATGTTTTTCCCTTAGTAAAACTTATATAACGTTAATTTAAATCAATCAATTTTAATATAAATACTGAATACCCGCCGAGAAGCCCACCCAAAGAAGCTATAGACTATAATTCTCGTATTTGACAACTAAATTATACTACCACGTTTGAGTTGTGCAACTTGTTTTTGCCTTCCTTTTAATTTCAAGTCATTTGATTGGTGGTGCCCTAATAGGCTGGGTCCCGATGACATATCAAGGATTCAATCGAATCTCCTCCGTTAAAAAATTATAATATTTATCGTCTATTACATAAAACAAACATTTATATGTTCAAAATAATCTAACTTTAAAATTTTCAATTTTATCTTTAATGACATGATTTTATAACCACGATTACAAGTTTCATAATTTTTTTTTTTTTTTTTAACTTTATGTTCGGTCAAATGTCATCACATTAAAAAAATAAAAATACTTCTAGTCCATTGATGACAAAAAATGTTCAAAATTGTTTTCTTATAAATTTCCAAGTATGACATTTAGTATTTTTTTTAAAAAATAAATATTTAAAAACTTTGGCTCCGCCATGGTCCCACCATTTTATGCTCCTTACAAATATAGGAAGCATGTCATTGAGGAAAAACCAAAAATCACAAAGAAAAAAAGTTTCTTTCTTTCCAGTTAGCAAGATATAGACAGCTTCTTCAAAATTTATTTCTAAACAAAATAATTTTTTTATTTTTTTATGTATGATTATTATTTGAGGTATTAATTGAAGCTGAAATATTTATAAAAAATGGAAAGATATGAAATTCAGAAAGACATTGGTTCTGGGAATTTTGGTGTTGCTAAGCTTGTGAAAGATAAATGGAGTGGTGAACTTTTTGCTGTCAAATATATTGAAAGAGGCAAAAAGGTTTCATTTTTATCTCTCTTTTTCATCCAATTCTTTCAAAAACGAAGCTAGGATTTTGAGTTTATGAGTTCTGGATTCTTATAAATGATTTATACATGTTAAGTGGATTTTTAAATCAAGCTATTGAGTTCTGTCGAACCTGGAGATAAAAAGGTTTGATCTTTATCTCTCTTTCATCCAATTCTTCTTAAGGTGGAGCTCGAATTTCGAGTTTATGAGTTTTGGATTGTAAAAAATGTAACTTATTGGATTCTTGATAAGTTATTTATACGTATTAAGTGAATTTTTAATCATAAATACGGCGTTTGAGCCTAGTATAATTGGGTTCTGCAGAACCAGTAGGTGAAGTTGTAGCTCCGCCCCTGAAGTTCTGATCAAGTTTCTCATGATCAGTTTTTCTGAATAAGTACGGAGCTAGAGTTTTGAGTTTATGAATTTTGGATTATAGAAAACAAAGTTTACTGGATTCTTGATAAATGATTTATACATATAAAGTGGATTTTTAAACAGAAATATGGTGTTTTGGGCCTAGCTATTGAGTTCTACCGAACTTGTAGGCAAAACTGTAGCTCCGCCCCTGAAAAAGTACATATTTGGATATATTGAACTCTTCATATTAGGCTGTTACAGAGTTTTTTTTCATTGAAAAGATTATTTTTTGTTACTCTGTTGATTATTTTTTTTCTTCTTTTAATTCATTTGGGTTTTTCTCTGCAGATTGATGAGCATGTTCAAAGGGAAATAATGAATCACAGATCTTTGAGGCACCCGAATATCATTCGATTTAAGGAGGTAATATTTCAGTATTGAATTGATAATGAAATAGAGAAAAACTCGTGATTGGTGTGGAGGGGAAGATTTGTTTATCATATAGTTCAAGAAAAAGTCGTCTCATTTCCTTACTTCGGTCTAGTTTATTCACTTCAAGACTGGTTATGAACGTCACGTAACATCACTCTCAACCAACCTCCACCGTACTTTTGGTTATGTAACCTGGCTTTCTTGGTATGCGAAGTTGGTTATGTAGGCTGACAACTTTTCCTAGGTTGTTCTGATAGGGAAAATAATCGATTAGTAGTACGTTCGAGATTGCCAAAAAATTGTGCTTATTTGAAAGTTAAAGTTGGAGATTTCCCAAAAAATGGCCACTTTGAATCAGATTCCCTAAGTGTTTCTGTCAACTGTTTATTCCACACAAAGTGAAAGTTGGAATTGAATGTTTTTTCATTCTCTTTATTTCTCCACTAAGTGGGAATTTGGAATCATGTAATGAAAAAGATATTTCTAACCAAATTGCCTTAATATTTGATATTATCATTGATCTTATAGTCTCCTTAGTACCAACTTTTTAAGAAGCCTAAGATGCTTTATCGGATTATGTTTTGATTCGCGAGTTGGTTAATTTTACTAGTCGATTGGTCAAAATCCCGGTTCTTGACTTTGTACATGATTCATTTTGTAATTCTCTGTTACTAAGAGGGCTTAAGACTCTGGCTAATTTGATTGAGATGTATAGACCGGAATGCTTATGCAGGTTTTCGTGATTGTGCAGGTATTTCTCACACCAGCTCATCTAGCTATAGTGATGGAATATGCTTCTGGCGGAGAGCTTTTTGAACGAATATGTAATGCTGGAAGATTCAGTGAAGATGAGGTAACACAAGAATCAAGATTTTAGAATTTTCATTTGTTTTTTTGTGATTGTCATTGCTCCATGTAACAGTAAGTCACCAAATTAGTACTACTTCATTTTTGATGAAAATCAAGTGATAAGCGGCTGCTTTCAATCTCATTCCAACGTGTAGCAGTTATTATCTCGAAACTTTATTAGATTTGTTGAGCTTATCTGTCCAAATTGATGTTATTTTTCATCAGGCAAGATTTTTCTTTCAACAACTTATATCAGGAGTGAGTTACTGTCATTCAATGGTATGAATTAGGCCTATGTTATAAGTTTGCTTCAAATTGTTTTTATTCGAGTACATTAATTTACTCTTGGATTATTAGCTTGTGATGTGATCGTTTTCTTGAATTGCAGCAAATCTGCCACAGAGATCTTAAGCTTGAAAACACATTGTTAGACGGGAGCTCTACACAATGTCTGAAAATATGCGATTTTGGCTATTCTAAGGCAATGAAACAGAACTATATGTTCAATCTTTTGATTGGTTTTGTTTCTTGATTCAGTTTCTGAGTTGTGTTAATTCATTGTTTTAGTCAGCAGCATTGCATTCACAACCAAAATCTACAGTTGGAACTCCGGCCTATATCGCACCAGAAGTCTTATCAAGAAAAGAATATGATGGAAAGGTATGACAATTGACCTTTGACATACAACTTCAATTCATAAATTGATTTGTTGACCTAATCTTCAAGATTTTTATGTTATTATTTTGATGTGAAGATAGCAGATGTTTGGTCTTGTGGGGTCACATTATATGTGATGCTGGTTGGTGCATATCCGTTTGAAGATCCTGATGATCCAAGAAACTTCAAGAAGACAATAACTGTAAACTTCTGCTTCATTTCTTTAAAAACCATAGTTGACTTCTTGATTTGCTTGTTAATTTGTCTCAAAGATATGCATTCTTTGACTAGATCTCTACACAAATAGCCGGCCTGATTTATTGGTTACTTTTCCTAGCTGGTATACATAGGTTATACACATCTAAAAACATATATTATACATCTGCTGGCTATTTTTAGTTTAAGCGGTTGGATGGGCAGCTATTTAGTCTAATTCTTCTTTTGTGTGACAGAGGATATTAAGCGTACAGTACTCGATCCCTTACTATGTCCGAGTGTCCAAGGAGTGTAATCACCTCTTATCTCGGATATTCGTTGCTGATCCTGAGAAGGTTGTTACTAGTTCTTAAATCGTTTTGATTTTGCACATTGATCCTAGAATATGCTAATCTTCTAATATTATGCTTGTAGAGGATAACGATCGAAGAAATAAAGAAGCATCCATGGTTCTTAAAGAACTTGCCTAAAGAATTTATGAAAGGAGAGGAAGCTAGTTTAGTACAAATGAATAGTGAAGAAAAGCCATTACAAAGCATTGAAGAAGCATTGGCTATAATTCAAGAAGCAAGAAAACCAGGAGAGGGGTCTAAAGCAAGTGATTATTTTGTTCATAGCAGCATTAGTATGGATCTTGATGATTTTGATACTGATGCTGATTTAGATGATGAGATTGACACAAGTGGTGATTTTGTATGTGCATTATGAGTGCTACTTTTGAACCAGGAGTCTTGGAGCAACGGTAAAGTTTTCACATGTTCAAGTCGTGAAAATAGTCACTAATGCTTGCATTATGATAGACTGTTTGCATCACACTCCCCTACTAACACAAGATGTTTTGTGCACCAGACTGCCCGTTATTGTGAGTGCTACTTTTCTTGGACAAAATGAAAAAGTAATTGCTATTTTAGTCTCTACTTGGCCCTTTAGTGTTTGTACTCTTTTTGTATTTTAGTTTCATAAATCTTCAATTTGATTGGAAGTTGAAGGTCCTTTTTAAAGATACTATATTCAAATATAGTACACCATGTATGACACCAAGGACTCCACGTAGTTTTGTTTGTATTGTTTGAAATTGTAAATGAAAATTGTGCAACTAGTTTTGAGTGTAAATTAAATATAAGATCATTGTCAAGTTTATAATATTACTAAATACTTAGATTAACTAATCCATAAAGAAGTCCTAGTATTGGACCTTTATTAGTACAAGGAACATATAATAAGTAGATTAATTGCTAACCATTGGTTATTGACCTTTGATTTTATTGAGTTTTTTTTCCCCTTTAATGTTGATATTATTTAATTTGTAGAAGTCATTATCACTTTCTATGGAAGGATTTTGCAAGCTGGTGAGACATGGACTTTTTAGCTATTTTCTATCGAATGTCTTGTCATTCAATATAATAATTATTTTCTCTTCTTCGAGTTATATGATATATTTTTTTATTTTTTTTCAAAGAAATGATATATTTTTATATATAAAAACAATTGAACTTTAAACTTTCGTTTTATCCTTATTCAATTTTAGTGAACGAGACCATAAATAAGTTATAGCTAGACAGAATATTTATATTTTTTTTAAACCTCAATCAAAGTGCATCACACATCTTTGAGCACACATAAATTGTGATGAAAACATTTGCTGTCTATATTTGTAAATAATTCTTTTTAAAAATTTAATATGCATATAGAATATGCTGACATTGTT

>PGSC0003DMG400030830

GTCAAGGTCAATAAATAAATAAAAAATGTACTTTTTTCAAGAAATATAAAAAGGAAAATGTTTTTTCGGCATCTTCACACGCCCATGGAAAGTGAATCACAAACGATTTGCCATGTAGGCAAAAAATGCTTAGACGGTTCAAATCTGGGGTGGTAGAGGTGTTCAATAGGTACTAGGTGTAGTTGAGGTGTCTAAATGAATAATGAGGAAAACTTTGAGGGGTCGTAGATAACTTTGACCTTAATAATTTTACCACTAGATCACCACCCGAAAATACCACAACCATCCATTTATAAATCACCTTATACACTTACTACTCAAACTATTATTACAGTAGACAATTGCTATTAGGGAGATCTTCTGTTAGCTCAAGATTGTTACGTAGGATTGAAAGATTCAACTAAAGAAAAGAAAATTAGAAAGGAGAATGAGATTTTCTCATTTTATTTGGATTGATTACAAATGACTAAGATCGTATTTATAAAAAAAAAATACCATTGGTAATTAAAGGTCCTATATCGTTGTACGGTCTAAAATTGTGTTCCAGTTTGATCAAAGAGCCCAAATTCAAAGGAGCAGGACCAACATGAAGAACTCCAATCAATTTATCCACCAATCTAATCACAACTAGTGGCAACTTTTCTGCAGATCTGACCAGACTTTTCTTCTCTTTTTTTTTACATATAATAAAAATATGTACCTGGACTAAGTTTTAGATTCCTATGGGTAAATTCTTATTGAAATTCACCAATTAAAGATAAATGTAAAAGTTCTTCCTACCCACTTGAGCTAATTAGTGACGACAATAGTTGAACAACGTAGTACCTGCCAAGAATGTGTGTCCAATAAACTCTCAGCTAGTAAACCTCTGAATAATTTGAACCCCTTTTGCTCTTACTGTTGAATATTGTCAATTCTGTAAGTCAATAACAGTAGTGGAAAAAAAAAGTTTGATTCTTTCTGAAGAATCTGAAACCCCTTTTGTTTCTTAATCTTGATGATGGAGCGTTATGAGATAGTGAAGGAGTTGGGTTCTGGTAATTTTGGAGTAGCAAAGCTTGTTTGTGACAAGAACACTAAAGAACTCTTTGCTGTTAAGTTTATTGAAAGAGGCCAAAAGGTTTCCCCTCTTTTATAACCTTAAATTTGATGTTTTCACTCTATCTAGGGTACTTTATTTATTTGTTTGTTTGTGTTTTGAGGTTGTTTGCAGATTGATGAACATGTGCAAAGGGAAATCATGAATCATAGATCATTGAAACATCCAAATATAGTGAGATTTAAAGAGGTATATGTTCTGCTTCTTACTAATGGAATGAATCTTCTTTTATCTTTATTCTATAATATGCTTTGATACCTATGGGGGTTCTGATTATTGCTGTCATCAGACTTACAAGGAACTTTCATTAAGTTAAAGATCTTTTTTTTTGAAAGAATTCTTGAGGTATGGAGATAGCTTCACATAATGATAGGATCAAAAATGTTAATGGATCTAATTACTAGAACAGAAGATCTCGTTTGTAATGTAATGACAGTAACAAGATTCTTATTGTTATGTGCTAGTTGTCCCTCATCGGATAAAAGAGAAATGGGAAATGCTAATGAGTGTATAGGCCAAATGGGCTCCCCACCTATCAGACTAGTCTTTTTGGTTGGCCTCTCCTGTTTGGTCTATAACAACTATGTTATGTTATGATTTCTTTTTGAAGAACTCTGTTGCTCGCAATTAAATGCAATCGTATCTTATGGAATAGATTGAAAGCTATCAACTTTTCTGGACAGTAGCTGTAATTTGATGATTTGCATATTATATTTTGTGGTTGATGATAGCAATTTACAATTAGTAGGATTACAGTGGCTGTTCAAAATAGTTTGTTTTTTTACATGAATGGACTTAACACTTCTAGGTCTTGCTTACACCTACTCATCTAGCAATAGTAATGGAGTATGCTGCGGGAGGAGAGCTCTTTGCGAGGATCTGTAATGCTGGAAGATTTAATGAAGATGAGGTAAGGATGATACTTACACTAGATCCCGCAATTAGTCTTGGCTTAGAACAAACAAACTGTGTATTTTTCATTTGATTTTATAATGTTTGCTTATGATAGTCAGATCTTTGCTGCCTCTTTACTTGGTTGGGTTGGGTAATATGCCTTTTACATTCATGGTAAAGTAGTAGTCTACCATGACATTCATATGGTCCTTTGATGTGGGAAGTCCGCTGGGTAGTAGTCTACCATGTCATGGGCTCTGTTGATGAGTGAAACTAAACTCTTAATTTCCAAGAATGCCGTCTTCTAAGTTGAAAGCATAGAGTGATATGCAATGAGAAGTGTCCCTTTAAATATGTTATCAGTTGACTATCTTCTTGTGTTTATGCCAGCTCTTCCGAGCAGACTCTTTTCTAGTTTAAAATCATCTGAGTGGCTTGGTTATATTTATTTTTTACTCAGGCAAGGTTCTTCTTTCAACAATTGATATCAGGGGTTAGCTACTGCCATTTCATGGTACAGCATCAATACTTCTCTTGCTGTGAACTTCGTTCAGTCCTCCTTTTGGGACTTAGTATATCTTTGGTCTTCCGTTGCAGCAAATCTGTCATAGAGATCTCAAATTGGAAAACACGTTACTTGACGGAAGTGCTGCACCACGTGTCAAAATATGTGATTTTGGGTACTCCAAGGTTACTGATCTTTTCTCTAGGTCTTATCAATTCTTACATCTATTCGAGATTTAAGTTCATATAGAATTGCTACATTTGTTTGCAGTCATCCGTCTTTCATTCTCAACCTAAGTCCACTGTGGGGACACCTGCTTATGTAGCACCAGAGGTCCTAACAAGGAAAGAATATGATGGAGAGGTGCTACATCTTTCTTCTTTGTCATAATATTTGTAGTATACACTCATGTCTCCTCCTGTTAATTCTTGAAACTATAGCTTGCAGATGTTTGGTCCTGTGGAGTCACCTTATATGTAATGCTGGTTGGAGCTTATCCATTTCAAGATTCAAGTGATCCCAAAAACTTCACAAAGACTATTTCTGTGAGTGATGACAACATAATCAGATTTCTAGTAGATGAAAAAGAAGCATGCTTTACTCTGAATTTAGTTTATCAGTTTGCTGCATTTATTGTTCATGCAAGTGTGAAGCATTGAAAAATTTACTGCAGCATTATGATTGGCTAGTTTCCATGACAGAAAATACTCACCGCTCGCTACTCAATTCCTGAGCAAATCCAAATTTCCCTTGAATGCCGCCATCTCATAGCCAGGATTTTTGTGGCAGACCCTGAAAAGGTAATAAAATCATATGGTCTTCTGCTGATCATAGAATAGTTCATGCGTTGTGTCCAAGTGAAATATCATTATCAGTAATTAACTCATTGTCTCGATATATATATATGTATTCTGCTTAGGTGCAAGGTTCGTTCCCACCAAAGGCCTTCCATAAACTTGCTTTCGTGTTTCTTGTTTCATGTTATATTGCGAATATAATGTGCCAAAACCATAAATGCATGCTGATTATACTTTTGCAGAGAATAACCATTCCAGAAATAAAAATGCATCCCTGGTTTTTAAAGAACTTACCAGTGGAACTGATGGAAGGAGGAAGTTACCAATGTGCCGATGTAAATAACCCTTCCCAGAGCATGGAAGAAGTTTTGGCGATAATACAAGAGGCTAGAGTTCCTTTGCTGGTTGGAACACATTCTTATGGGGGCAGTATGGAACTTGATGAATTGGACGAAGCTGATATTGAAGATGTAATCGAAACTAGTGCTGATTTTGCTGGTTTACTGTGATGTGTTACACAATGCTGTAATTACCTTAGGGTGAAAGAAGAGAGCAATTGGTAACTGCTGTAATATCCTGAGTAGACACTCAAGAGTTCACCCTTTAAGTTGCTCATAGTTTTTTTTTTTTCCTTTTGACTAAAAGGTGTTTACTACTTTTGAAATCTTGGCTTAGTGGAAATCTGGTCTTTAAACACACACTTGTACCACAAGATAACAGTAGTTTTGTTTAGATTTCTGGAATTAGATCGTTTTTTTTTACACAGTAGAATTTTAGTGGGTGATCCTTTATCGTATATAACAACATTCATATAGGTGAATACAGAGTGATTGACTGAAGTTGCTATTTGCACCAAGTTATCAGTTTAGTTGCATAGATGAGCTGTTTTCGAAAGCAGGACGTGAGTTAAATAAGAAGTCGTAAGAACAACCACCAAGGCTAAGCCTCCTTACTCCACAATCATTGTTTCAAGTTTTTGGTAGATAATGGATTTTGTCAAAGTTTTGAAGAAATGATCAGTAGGAAAAAGGGGGGGAAATATCAATAAATTGCCTCAAACAAATGTTAAGTGCACGCTCGATTGGACTTATTCAGCATTCATCAATAAAACTGAAAGATAAAAATTAAAAAGAACAAAAGAAAGTTCAACAGGAGCCTGTATAACTATTAAGATCTCCCCATACAGCTTCCTCGACTTCTTCCCATGATTTTGCCATCTTCAGTTTCTTCCAAGTACTGAAAATAGCTCCCAGAACATTTTCATCATGCAAAATACAGTAGCACCTGTACAGATAGAATTCATTGAAAGTGAGTTTAAACCACTACTCCATGTACGAATAAGGTGCGACAGGGGGAGTAGTAATAAGTCATATAGAATCAATGGATTCATGAAAAAGTAAAACCCATTGATGACATATTTTATCACAATTAATATTTTTTGGCTAATAGAGGGATCAAATGGTATATAGTTCATTTGTTGGTAGCTTGGAGCATTAAAAGCACACCTCT

>PGSC0003DMG400023441

AAACTAATGAATGGAATAAGGTTTCCTTCATCTTTAACTAAAGTTCTCGAATCCTGGGTACGGAATTGCCAAAGTGCTTTACCTCCTTTCTGCCTCCTTTCCAGTGCAAATCCCGATTTAATTGGACTCCAATATAATTACTAGATATCATTAATTACAATAGATAGCTATATACACATACTGAAGAAACATAAAGCTGATCAGAGTAACATGATCATTATCTGGGAAATGTTGAGATTCAAATAATTCATAGTTATATAGTATGATATATCCAAGCGACCGATTGACCAATAATAATAATAATATATAAATTGCTTAGCAGAAAAGATATATACTACTACTAGTATATAATTATGGTGTAAAACTAAAAGTCAAAAATTGTGACAACCCACATCTGCACTTCCTTGGAGAGCGGCGTTTTTGTAGATCCAAAGCAAATCAATGACGTCTCTTCAACTTTAATTGTCATCATCATGTATACACTAGCTCTTATGTTTGGTTGGAGCCTGGAGGTATTAGATAGTAATTGTAACTTATTCCCAATCAAACGAGTTTAATGTTTTCTTCCCACTAGCTGATTAATTTGTTTAAAATATATATATGGAAGTGGTGATGAGTGGGACTTGGAGTGTGGAATCAATCCCATACCTCTTTACTCTTTACTCCTCCCCGGCCCACCCCCACGTGCTCTTCAGTAGTGTACATGTTAAATATTAATCAAGGGCAGGCTTGATGAACTGCACCTTACCTTTCATATGTATATATATATATATATATATGCCAGTTGCCAGTTGCCAGTTGCCAGTTGCCAGTTTTAATCACTTCATCATGTGTCATCCATAAGTCATAAACTCATCAATCCCACTAGCCACCTATAAATACATCACCTGATCAATCATTTACCAATCTATATTGCCCATATATACAACAAATCCATAGTATATATATATATATATTAATTATAGTAGAAGCTGCAGATATATCGAATTAGCTAGCGACGATGCAGAATTACGAAGTTGTGAAGGAATTGGGATCTGGTAATTTTGGTGTGGCCAGGCTCATGAGGCACAAAGAAACTAAACAGCTTGTTGCGATGAAATACATAGAGCGAGGCCGCAAGGTACCCTGCCCTGCTCTGTCTTTCTGCTTATATATATAAATAATACTAATACTAGTTTAATTTGTTGTAGATTGATGAGAATGTGGCGAGAGAAATCATAAATCACAGATCATTGAGACATCCAAATATTATTCGTTTCAAAGAGGTGTTATTAACATCAACACATTTGGGAATAGTAATGGAGTATGCAGCTGGTGGTGAACTCTTTGATCGCATCTGTCAAGCTGGGAGATTCAGTGAACCTGAAGCTCGATACTTTTTTCAACAGTTGATCTCTGGAGTCCATTACTGCCACAACATGGTACTTCTTTCATTTCTATTATATAGTAGCATCGATCAATCGGTCACTGATAATCAATATTGTACAGCAAATCTGTCACAGAGATCTGAAATTGGAAAACACACTGTTAGATGGAAGTCCAGCACCTCGTCTTAAAATTTGTGACTTTGGTTACTCCAAGGTACTTACATATACTATAAAATTAATTTCATATATTTTCTATATATACCGTACTGATTTAGTCATGATCGCATAACAGTCGTCTGTGTTGCATTCACGTCCCAAGTCGACTGTTGGAACACCAGCCTATATTGCACCCGAGGTTCTATCGCGCCGTGAATATGATGGCAAGGTAACATAGTATGAATCTTTTTGTTGGTTAAAATCTAAGTGAAATACTATACATTGAAGCAATGCAAGTTGTAAAAACAAGCATGTGAAGTTTGCATTATATCATGTGATGGTGACTTATAAAATAATCAATGGACCTTATCCTTGAAGTTAATTATAATTTCTTGAGTGATCCTACTTTCAGCTAGTTAATTAAACATGTCATTTTATTGGTTAAGTATACCTACTCTCCCGGCCTTTATATATCTAATATTTTTTATATGATATTAAAAAGATCTTTTCCTTTTTTTATTAGTCTGCAGATGTATGGTCATGTGGAGTGACTTTATATGTCATGTTGGTGGGAGGATATCCTTTTGAAGATGTAGATGATCCCAAGAATTTCAGAAAAACTATTTCAGTGAGTTTTCTCTCTTTTTAAATAAAATAAAATAAATTGACATATGCACTTTATAATATATATTAATTAATATATGGTGCTTTTCGTTTTTGCAGAGAATAATGGGTGTTCAATACAAAATCCCAGACTACGTCCATATATCTCATGATTGCAAACACCTTCTTTCTCGCATTTTTGTTGCCAGTCCTGCCAGGGTATTATATGATTCATGCAATAAACAATTGTAGTATAATTTATGTTACAACAAATGTGAAATCTATAATATCCAACTTTAAGTGTAGACATTTGAATTTGTATAAAGTTGAACACTAGTATAGGACATGTCTGTCTATCTACTTGTTCAACTTTAAACATGTTTTAGTGTTTATTTATGCACATCCGAAATTGGAAGGCATAGATGTTAGCTGAGGTCAAACTAAAAGGGAAATTCATGTATTATGCCTTCATTAAATGTTGACACTGCTTACTAAAATTTCTACATATGCTGTTGTGTACTAATGTATGATATATGTTGGGGCAGAGAATCACACTAAAAGAGATCAAGAATCATCCATGGTTTTTGAAGAGCTTGCCAAAAGAACTTACAGAATCAGCTCAAGCAGTATATTACAAAAGAGACAATCCAACATTCTCCCTTCAGAGCATTGAGGAGATCATGAAAATTGTATCAGAGGCAAGAAATCCACCACCTCCATCAAGGCCAGTTCCGCACTTTGGCTGGGGAACTGAAGAAGAGGAAGAAGACGGAGAGACCAAAGAAGAAGACGCGGAAGAGGATGAAGAAGATGAGTATGAAAAACAAGTTAAGCAAGTTCATGCAAGTGGAGAGTTTCATATCACACATGATGATGCTTAAGTTTACCATTTCTCATTTTCAGTATAGTCTCTGATTTTGATGTTCTGTACAACTAATAACAGCACTTGGCCTATTTCCTACCATCAACAAACTTTTCAAAAATATTACTAGTATTCCTTCCTTGTATTGAGATTGCAGGGTCAAATGCTTCTATCTGAAAGCCTAATGGAAGGATATCTGCATACTTCTTTCTAAGAAGACATTACACTTAACACTTCACCAGAAATGGGGTTTTCCTAACTGGATATTCCTTTTCTTTCCTTTGGAACTCAAAGCTCTGAACATAATTAGCACAACAAAGACAAGAAGCTGATTTACACCCTTCTAATGGAACGGAAATGATAAGATTGTAATTCATCTCTAATAATCAAACATTAGACAACGGTTGTCGCCCAAAGTTTTAACCAAATACTGAACAATGGCATACCACAATCCTACAACAATTAACTCATTATATTCTCATGACAATCCTCCAAAGAGAAAACAGAGGAAACTTCATACATGCAGATGTCCTTCCCAGCCATCCCAACTACAAAGCGAAGCCCTTCAAATATTCTGGATCTCGCTTTGCACGTTCCTGGAATTTCTTGAACCAGCGATCTAATATATCCATAGGCACCACCAGCTTACTGCCATCCACTCCACAAAATGACTGCATAAAATTGAATAAATTCTCGCCAACTTTCAGTGCCAGCCTCTCAATTTTCTGCCCTGCAGCCACATCAAGGGAGGGAAGTGTTGCAAGATCCTCTACTGAAACACCTATCTTTGCAGAGATTGGAGTTGCATCAGCAGTGAGCTGCAGTTGCCCACCGGGCTCTGGCCAAGGAAGCGACAGCACAGCAGAGGGTCGAGTCAGTGTGACTGCACCACAAAAGAGGAAAGGTGAGCCAGGGGATTGGATATACACAGCGAGGGCCTTGTCTGGTGGCAGCGTGAGGTTGTTGAGGAGGAATATGCAGACCTCTCGAATTGAATCATATGCTTCCCCTGCACATGT

>PGSC0003DMG400026211

TTGGATAATATATTCTTCAATGCTACCCCAAAAGTCACAAACAAGGGGCATTTTCGTATCTGACCAAAACGATTGTGGTACAGTAGTAAGACTATTTCATTCCTAATTAAAAGTTTTGGTTTTGAGCTTTAGGTATTAAAAAAAATTATGTTAAAAGCGTTACTCTCTAATGAATCATGCAATGCGCTTCCAAATTTAATTAAAGCTACAGTACAGACATCAAACATCGAATATAAAACCAAAAACAAGGGTCCCTTTCTCAGCCCACGTTTATTAAAATAGTTGAATTTAAAAATTTACTTTATTTTTTTGGTACATTGAATTCAAAAGTTTATCCCATGCCCAAACTTAAATAATTCATCCACCTCAGCCATAACCAAGCACTTCAACACTTATTGAGCTTTGAAGCCAACCCCTCAAATATTAAATTTGTTAAATTATTTATACGTGCCCTTTAGTTTAACCAATAGAACATTAGATTCTCCAATTGTTTTATGTACATACTTCAACTGATTTTTGCATTTTGTCATCATTTACTATAAATTGCAGTATAACTGAGAGTTGTTGAAAACAAGGATTGGTTAGAAACTGAACTTTTGGTTGTTAAAGGCTGAATTTTTTTGTCTTTCTTGATTTGGTTGGTGAAAAAAGATTTGAGTCTTTTTTGTTATTCTTGATTTGGGTTTCTCTTTTAAAGGAAAAATATTGAATTTTTTTTGCTTATTCTTGATTTGGGTATTTCTTACAAGGGAAAAAGATTGCGTTTTTTTGGTTATTGTTGATTTGGGTATTTCTTACAAGTGAAAAAGATTGAGTCTTTTTGGTTATTCTTGAGTTGGGTATTTCTTTCAAGTGAAAAAGATCGAGTCTTTTTGGTTATTTCTTTCAAGTGAAAAAGATTGAGTCTTTTTGTTATTCTTGATTTGGGTATTTGTGTTCTTTTGAGTTTGAGATTTAAGAGCTTGTGGGTGTGTGATTTAGTGATTTTGGATTGGATAGAATGGAGGAAAAGTATGAGCTTTTGAAGGAACTTGGTGCTGGGAATTTTGGAGTAGCAAGGTTAGTCAAGGATAAGAAGACAAAGGAGCTTTTAGCTGTCAAATATATAGAAAGAGGGAAAAAGGTAAGTTTTTTTGTTATCAAAAAAGTTTGTTTTTGTACTTTTGTTTGTAGATGCTGTCATTATATTTCACTTTGTTAGGTTAAGGATTGTGTTGGATTTGCTTATTTGGTTGATTTTTCTACTGAACTCCTTTACTGGTTCTACTTTAGAATTTGTTAGTTTTGAGGTTTTGGGACTTCTGAGTTTGCAAAGTCAGCTTTTGTGTTTTTTGCAAGAAGTGTTTGCTTGAGCTGAAATGTTGCAAAAAAGAGTTTTTAAAAACTGATTTAGGTTTGAAATGCAGTTGACATTGTGTTGTTGCTCATTCAACTATAAGCCAAAGTAAAAAGGAGCAATTTTGGCCCATTGCAAAAGCTTTTTTAAGTACAATATTTTTCTCCTCATTTACTAAGTTGTATCAAATGCTTTCAGCCTGATGTAGTAGTTATTGTTACAGTATGTAGTGTTTTCTCCTTTGATTCTGTTAGCCTGATGTAAAGGTTGCTTTATTCTTGTCTCAGATTGATGAGAATGTGCAGAGAGAAATTATAAATCATAGATCGTTGAGGCATCCGAACATTGTTAGGTTTAAAGAGGTAAATACTTCCTTTATATTAATTTGTTATGAACCTGAGAATTGCATATATCCATCTGATGTTTACTGTATATGTTCTTTTTTATAATCACATTGTAAGCAACATTATTACCCTTTCTGAAACAAAAGGATTGGACTTGGTAGAGCTTAGCTTGCCAGTTTAGAGATAGCGATCACTATGCTTGTATCGAACCTGGATAGATATGATTATCATATGGGGATGACTTCCCAGATTAAGTGTAAATAGAGATAAATTGCATTAGTTCTCTTTTGTTAGCAGAGTGTATATTGAGTATTTAGCCGACCCTAACTTGTTTGGGACTGAGGCGTAGTTGTTGTTGGAAATAAAAGTACATTTCAATAGTCCTGTTGTTAAGTAAGCAGAGTGTATATGAGTAATTCCTTGCAGTTATTAACTTAAAACTTCAAAACACAATTTACAGGTCCTGTTTTAGTCTATATAGGCATAGCAATTACATAATCCCCTCTTGAGCAACTGTAATATTGAGTGTTAAAGATGGATTTTTGTGGAATGCTAACGATGAGTTCATGGGGATGAAACTTTAGTTAGAGATATTTGATTAACCCAATTTTTGTTGTAGTTGGCCTATGGATTGATTGTTTTCTTCATCTCTTTCTTGTGCACACTATGTACGTTTTTAGATTGCTGAGACTCTGCAATCTAATACACCTATCAACTTCTGTTCTGTTCTATTATTCTCGTTTTGCTGTTCATACTTGTCCATTAGACTACTTGGCACATATATTTAATACAATGTACTCAATGGACCATTACACATTCACAGTTACATGGCATACTCCCTTTTTTGTAGGTCCTGGTTACTCCGTCGCATTTGGCAATTGTTATGGAGTACGCAGCAGGTGGAGAACTTTTTGGTAGAATATGCAGTGCTGGCAGATTTAGTGAAGATGAGGTTAAGCAGATATCTCTAATTCCTCATATTGTTCTATGTTTGATGAGAATGATCATGTTGAACTAATTAACTTCATTTTTTGTGTCAACAAACAACAGGCTCGTTTCTTCTTCCAACAGCTTATATCCGGTGTCAGCTACTGTCATACCATGGTAAATTTGAAAATACATAAACATCTTGTTAAATGCTCATCTTTCTAGTCATTCTCAAATCGTGTTTTTACTTTCTCGTAGGAAATTTGTCACAGGGACTTGAAACTGGAAAACACTCTTCTTGATGGAAGTCCTTCACCACGTTTAAAAATATGCGATTTTGGTTATTCCAAGGTTTGTTCTTGACTTCTAGTTATGAAGTTTACTATGCTACATTTAACATGATGTTAACTTACTTGTATATACTTTATCATGCAGTCTGGTTTGCTGCATTCACAACCAAAGTCGACTGTGGGAACTCCTGCTTACATTGCCCCCGAGGTCCTGTCACGAAAGGAATATGATGGGAAGGCAAGTTCTCTTCTCGGACATTGCTTTAGTTTTGTCACTGATTATTACATTTAACACGACGCTAATATATTTACATATTCATAATGCTTAGTTACATGACTGTATTCTTTTCTTGTTGTTTCACTTGAATGCTGTGCTCATGTTATCGTTATGCATTTTTCAGATCGCAGACGTGTGGTCATGTGGAGTGACACTATATGTAATGTTAGTAGGAGCATACCCTTTTGAGGATCCTGAAGATCCGAAAAACTTCAGGAAAACCATTGGGGTGAGTTGTGTATACTTCTTTCGAATGTTTGAACTAAGTACAAGTGTGTTGAAATTTGTAAACCGTAGTCTCAATTAGCGACTAAAGAATCTTTTATTTGTGTTCAGAGAATAATGAGTGCCCAACACTCCATACCCGACTATGTACGAATCACACCAGATTGCAAGAACCTCCTTTCGCGAATCTTTGTTGCAAATCCCTCTAAGGTAAAACTCATGACTTAACTATGGTTTGTTCATTAACATAACATGTATATTGTTGACTTCAATTTGTGCATTAAACGTTCGAATTCCCTATTCAGAGGATAACTATTCCTGAGATAAAGAAACATCCTTGGTTCTTAAAGAATCTGCCAAAAGAGCTGATGGATGTTGAGCACACGAAATTCGAAGAAGCTTCAGAGCAACTACAACAAAGTGTGGAAGAAATCATGAAGATGATACAAGAAGCTAAAATACCTGGAGTAGTGTCAAAATCTGAAGGGAAAGATCATGCAGGGACAACAGAACAAGATGATTTAGAGGAAGACCTCGAATCGGAAATCGACAGCAGCAATGACTTTGCTGTTTATGTCTGAGGATGATATTTTATGATCAATTGGACTTATAAGAGTAAATGTTTAATTTTTATCTCCTATGAATTGTCTATTATGTTGTACAGAAGAGTTATGCACAGAGCTATTTACTGAGTTCTAAGTGTGCATTTCAAATTTGTTAGATGGATGTAATGTTTGCTACAAACAGATTATGTAACTTTTGAAGTTTTAACCATTTGGTATCTGTCTAATTTGGATTCACGTTGTGTAAGCCCCAAGTGGGGGTAAAGTGGTCTCAATTGTAATGTTCTACCTTTTCAGGGCTAGAAGTTGAAACCTCTTTAGCTTAAATGAAGAGATTATACCATCACATCACAAGTTGTAGTTAGTGTTGGAGTTCGAATTTTCGCTAAGATCGATGAGTTACTTTATGTTAAATGTTAGTTTTCATTCATATATATTAAATTTTGTACACTTTTAGTGAAATTCGTGTCTATAGAAAAGCAAGGCTAAAATGTTCAAGATCGAATGATCTTCAATTAATTCATGCTAATAATGTATGTTGTTATGTGTTCGAATAGTGAAATTAATTATTAATATTTGTATCTGAGTAAACAACCTATATTAAGTGCATGATTCTTCTCTAAACTCGTAAATATGAAATACTTTGTGTACCAGACTGCCTTTAATTTTTGTAATAATGCATACCTAACTTAACAATTTGAAAATAACTTCTTTCATTTTCTAGTCTATTATTTCCAAACTAATTGGCTTCATCGTTAATTCTTATAGTTTATATTAATGGACTAGAAAACAAGTTAATTTATGATCTCAAACTAACTGACTTCATCGTTAATTCTTATTTTATAACATAATATACATGAAATCCTAGCCGCCATAAAATAAAGATTGCCACCAACACAATAAATTGCAATTTATTGAAGGATTAAAAAGAATAATATTGATATCCTCTATTATTGGTGTAAGTACAACTTTACAACCTAAACCTATTTAAAATCAAAATATTATGTATTAAAATAAT

>PGSC0003DMG400016156

GTCAACCCTAATTTAAGGTCCAGTTGAGTCCTTTATGAAAATAAATAAATTCAAATTGATTAAGACCATTTGCTAAATAATCCATATAAACTAAGTAAAACCATATAATATAAGCTTAGAAAAATCATGTTGGCTTCAGAATTTCAAGATTTTAGTTCACAATTTTGCAAAGAATTTACAATATTTGTATCACTAATGTAGCTCCGCGGTCACACAAGGGGGAATTCAAAATTTAAAATTTGAAAATATGGCTTTTAAGATTTTTAATATTGAATTCATATTTTAAAATTATGGATTCAGACTAACTCTTTATTGCAATATTAATGATTATATATTGATTTTCTGCTATACCATTAATATTAAAGTGTTATTGGAAAAAAAGAAAGAAGAATCTCATTCGTTTCATCGCATCACTTTTTTTGTATGGGCGTAGTTAAAGGCAGAAGGGGTTCATCTTGATGAATAGAAAGTCTCACATACGTGAATGAATAGAATTTTGGGATCCTTATAACGGTTCAGATATCTCTTCCCTTTGAGCTAATTTTTGGGACGTAAGTTAGGAACCAAACCAAATTTAACATGGTATCAGAGTCCCGATGTAGACTAGAAAATCGGACTATGTATGGAATGTCGCACATTGAAATCATGACCTCTTTATAAAACTTGGACAATCTTACATCTGGACCTTCTTCGTCAGAAAATTACATTCCTTTTACTAAAAATCCTGAGCCATCATTGATCTTTTGTCATTTTTTTAATGTTAGTTTTCTCTTTTTAGTTTCAGGCATTTAAATATACCACGTTGGTAGATTCAACATATCTTAATTATACTAAAATTTCAATCCATTGGAACAGTGCCACGTGTCTACCATTCTCTAGTGTATGTTCTTGTCAAGTAGGACTTGATCAACAGTTCACTTTAGTTATTATAAATAGTGCCTTACATGTATTATGATTTTTTGTTTTCAAATACTCAAATTATACATTTTTTATTAATATAATGGAAAGGTATGAAATTTTGAAAGATATTGGTTCTGGTAATTTTGGTGTAGCAAAGTTAGTCAAAGATAAGTTGACAAATGAGCTTTATGCTGTCAAGTATATTGAGAGAGGCAAAAAGGTTTGTGTTGCTTCATCTTTTTCCTTTTCGGATTTCGGTATCTACTTTAGAGGTCGAGGATTAGGGTAGAAGGTTCGTAGGTAGCTGAATGTTGTTGCTCGTTGCGTGGGTAGTTATCGTGTAGTTTCTTATCCTTTAATGTGTATTACTATTTGTTGCTTTGGTTGTTTTGTGTTTTCATATTTTACCGTCTTATTTTCTTACAATCCCACATAGAATACTTTTATTTTTGAGGTGACGGTCTATCAAAAATAGCTCCACTCTATAAAGGTAGGGTAAAGGTTTAGGTGTATTCTACCTTCCACAGACATCACTTGTGGATTAAACTGGATATGTTGTTGTTGTTGGAGTTCGGTATCCACTTTATGGGGCTCTAACTAAGCCTGATTCGTGTTGTGTAAGGCCTATTAAAAGGGAAAGCGCTTTCTACCAGGATTTTTTTTTCATATCTAGAACTCGAAACTTTTTGAGTAATTCATAATTCATGTGGTAGTTATGAAGTATGTAAACTTGTTCTTGTTAATTGACAAGATTAATTTTTGCTATTTTTTCTTGATTTATTTTGGTGTTGTATGTGTGCAGATTGATGAGCATGTTCAGAGAGAAATCATGAATCATAGGTCCTTGAAGCATCCCAATATCATTAGATTCAAGGAGGTAATTAATTAATGATGTATTAATTACCTATGTCAACGTCTGGTCTAAAAGCTTAAACTAAAAAAGAGAGCGCACCACACATTTATTCACTTAATTATGTTTTCAACACGTTCTCTCACGTTTGATGGACTAAAGTTTTAAAGCATGTTGTTCATTATTGTGTAGGTATTTCTTACCCCAACTCATCTAGCCATAGTGATGGAATATGCTGCTGGTGGAGAGCTTTTTGAAAGAATATGCAATGCTGGTAGATTCAGTGAAGATGAGGTAACACACAAAAAAAAAAACTGTCGCTCGGATTATCCAAAAATGTTGCCCCACTTGTGTCGTAAAACTGCGCTACTTTTGGAAGATCTCACACGCACCCTGTAGCATTTTTGAAGAGTCTGAGCAAAACATAGATAAGCACTCAAATTAATTCCTCTTCTTCTTGGAAATTTGAGATACGCGCGTCTATTTTTAAATTTTGATCCATCTTTTAGAAGTTCGAATACATATGTGATTATATCAGCACTAACGCATCGAATCTCATCAAACCTCTGAAGTTAAGCATGCTTGAGCCAGAGTAGTACTGAGATGGGAGACTCCTTAGGAATTAAGTTCTCCTGTTACATCCCTCCTTTTATCGAAACTAAGCTTAATTTTGTCTATTTAATTATAATTTTTTGCTTAAAAGGCCCAAATTGTTTTATCAAATATATAATTCAATTTTAGTGTCATTGTATTTTGAGCTCTTTTTTTCATGTTATCTGTCCTGTTTTTTGTTATATTTTTCAGGCAAGATTTTTCTTTCAACAACTTATATCAGGAGTCAGTTACTGTCACTCAATGGTAAAATTGAGACCTATTACTTCATTTGAATTAATTTATTCTTTATTAACTCTTATATGATGCTTGGAGTGCCGTCCTTTTTTGAACTCTGCTAATGCGGGATGCTTCCATACTCATGTTCTATGACATGCATTGTGATGTCCAAATATTTTTTTGAATGCAGCAAATTTGTCATAGAGATCTCAAGCTCGAAAACACTCTCTTAGATGATAGCTCAAAACCGCGTCTTAAAATATGCGATTTTGGCTACTCCAAGGCAATGATCTTGAACTCTACTCTTATGTTGAAATTGGATCATTTTTTCATCAAAATAGTTTCTCATTGTTGTATGTATAATTCATTGTGTAGTCATCAGTCTTGCATTCTCAACCTAAATCTACTGTTGGAACTCCGGCCTATATCGCCCCAGAAGTCTTATTGAGAAAAGAGTACGATGGGAAGGTACAATTATAACGATTTGAGTAATAGTTTTGATCGTATATTGTCAGTATATATAACTTAAATATGTTCATCAATTATTTGTTTGACTTGAACTTCATGGTTTAAATGTCATTATTTTGGATGTAAAAGCTAGCAGATGTTTGGTCTTGTGGGGTAACATTATATGTGATGCTTGTTGGTGCTTATCCTTTTGAAGATCCTGAAGATCCAAGAAATTTCAGGAAAACTTTAACAGTAAGTTATGATTCATTTTTATAAAAAAAAAACGCGTTAATTTCACGTATGGTCATTGAACGTTACTTAGTATAACATACGTGAAATTTTGGTTTATGACAGAGGATATTAAGCGTTCAGTATTCAATCCCTTATTACGTTCGAGTTTCAAAGGAGTGTAAGCTTCTTTTATCTCAAATATTTGTAGCTGACCCTAGCAAGGTTTGTAGTTGTATTTTCACTTCAAATTTGTACATTTTTTTTGTTGTTTTTGGAGGAAAAAATTATTGAAAACACTCCTAAAGTAGGCGTGAATTATTAGTTTCATCTCCGAACTATTTGACAGCCTTAAAAACACCTCTTCACTTGATTAACTGAACTTAAATACATTCCCGATCTTGCAATATGAGTGAATTACATCCTTAAATTCTTGTCAAGTTTAGAGGTATTTTCAACACTTCTGTCGGCTTTTTATTAAGATCTCCATGCCCTTACAAGGGTTTGAGACCACTTGCTATGTCATGTGGCAGAGCGGGGCTGATCAAGGGTGTGTCTAAGTTTAGTTAGTCAAGTAAAGGGGTGTTTTAAGGCTGTTAATAGTTCAAGAATGAAACTAATGATTTGCATCAAGTTTAGAAGTATTTTCAACAATTCTCTCTTGTTTTTTGTTTTGTTGATAAACAACTAATTGTGTTGTATGCTTTTTGTATATGTAGAGAATAACTATTGAAGAGATTAAAAAACATCCTTGGTTTCTAAAGGATTTGCCTATAGAATATATGGAAGGGGAGGATGCAAGTTTACAAATGAAAGAAGAAAATGAACCAACTCAAAGTATTGATGAAGTGTTAGCTATAATTCAAGAGGCAAAAAAACCAGGAGAAGGGCCCAAAGGTTGTGATTTATTGGTCAAGGGAATAAGTAGTAGCATTGATCTTGGTGATGATGATGATTCAGATATTGATGATGATACTGATGATGATATAGAGACAAGTGGGGAATTTGTTTGTGCTTTATGAGTGTTGCATGTGTATAATAGACTCAATGTGATCATACTCATGTGTGGAGATTGATGTAGATTATAAGATAGAGATGAGCGCATGCTTTTTTTTTATCTTTGTATTATGAGTGTTACATGTGTAGATTTGATGTGGTTCGATTGTTTCTTGAATTTTATTGTGTATAATGAAAGCTTTAGTGTGTGCATTAAACGTTCTTTATGATGAGTGTTACTTTACATTGTGCCATGAAGGTCAAAGTCAAAACTATGATGATGATCATTCTTTAGCCATGAAAATGATATTTTTTCCTATGGTTGTTTAACCATGATTAAGTGATATCAGATATGTATTTTCACTTCACTTCGAAATTATGTATAAGATAAAATTTCTTGATTCGGTGAAAACGCCTGTTATCGATAAATATTTACCCTTTTTAGCATAGTGGTTGTAAGTTTTACTCTATGGCTTTTTGCCTTTTACTTAATCTCATATATATTATGTTTATGAAATTCTCTAATTTTGGTAAAGATTATACCATGTAGAAATATTCAATCATGTATGAACCTTTTGATTCTATATAACATTCTTGTAATTGTATATAAACACTACAAGTTGTACTTATGTGATGAACCACAAGTGCATTAGACCAATAAAATGCATAAAGATTTTGTTGTTAATGTATGATAATTCATATATCTGATCCTAACTTATATTCAACGAAACACAATTGTTATTATTCAAATAAATCGATCAACTAAATCGAGAAAATTTTAACCTTACAATAAGAGTATATATACTATTATACCTATATGTCACTTGAACACAATTGCTTGCTCTATGTTCACATCCATTATCTAATGATTATTTCGAATTAGTAACTTTCTTTTTTTTGATTGGTTACAAGATTTCCAGTGTATATATTTTTGAAAAAAAACAGGATAATGTTTTTGTGCTGCTTCTATTAATTCTTATGTGTCCTCCCAATAATTGATATGTGTGTCCCACTTATATATAAGTTAGTAACAATTTTGTGCTATAATAGTTGTCTTTAATTATTGATTCTCTGGCTTGCTAGATCTTGAAGTTTCCCATAATAATGTTAATGGATAGGGCTGACAATATAGAACAAGTAACCATCTAATCCTACCTATTAAATGCATATAATACATCATCTTAAAAATATTTTTAGCGACAATTAATAAATAAAAATAATTAAATATATTCTTTTAGAGGCAGTTAACACTCTTTATAAATGTCCCTAAAATATATAACAATATTGGATCTAATAGCAATTAACTAATGTTGGTAAAGACTTTAGCACT

>PGSC0003DMG400017763

TTAAAAGTGGCAATATATGTAATTGTTTGAAAGTAGAGATAATATTAGTATATATGGTATGGATGTATGTCTAATTAGGTAGTTTTCCCTATTAAAATATATTCAATTTAGTTTAGGACAGGTACAAAGGTGACAAAAATTGGGCCAAAGGCCCAAAACGAAAATAGGCCCAAGTGCTGGCCCAGGCGAACAGATCCAGACTTGGGCCCATATTTTCTTTCCCTATTTACTTGATTGATGTTATGTGCTTATTTGTTAAAAATTAATATTAAGGTTTCAAAACTTCAAATCCCCAAAAGACACCCATTTTAAATTAATTACTTAACTAAATTATTCATTTTTACTTTGACTTAAAAATAAATAAATAAATAAGCATACAAAATACTTCACTTAAATAACATTGCAATATTTTTCAATTTCTCCCTTGAAATGGTCCTAATTATAAAATAATCCAATTTTGTGATTCAAGCTAAATTAAAATTATTTTAGCCAAATAATTAATTGTCGGATAACCGCATTAGCGGATATTCTAGATGCCTTAAAAACCTTCCTAGAATATTAATAGGAACCTCGAACCCCTCTTTAAATATTTTCAATCGATTTCCTGTTTTTATCGTTTGAAAAAATTAGTTTTCTTGATTTTTTCTTAAAAATTAAGTGGCGACTCTTAAATCAGTCCAAAATTATATTTTCCTAATAAAACAATATTTATGTATTATTTAAAGTCTTTATTTGATTTTCTACTTTAGACATAGACATCCTAACTTTTTATCATTACGTGGCTAATTGTCAATTTCCACGCTGATATTAGTGAAAACTTTAGCTGCTCCCAAAAACACGTTTTCTTGTCTTCTTTTGAGTCAGTGGTAAGTCTCAATTTTTCCAAATTATTTTTAGTATGAAATTGCAAGTCAAAGGAAAAAAATTTGCTTCTTTTATGAATTGTGATTTCTGAAAAATCTTGAAATCCCTCTTTTTCTGTGTTTTTTTTTTTGTTATAATGATAGAGGGTTATGAGTTTGTGAAGGATTTGGGTTGTGGTAATTTTGGAGTAGCTAAGCTTGTAAGAGATTATAAGACTAAAGAGCTCTTTGCTGTCAAGTTCTTTGAAAGAGGCCAAAAGGTCAGCAAAACTTTAATAATTTTAGTCATTTGTTTGTTTTTGGATAGTGATTTTATGTGATATTTCAGATTGATGAACATGTACAAAGGGAAATTATGAATCATAGATCATTGAGTCATCCAAATATAATCAGATTCAAAGAGGTAACTCTTGCTAATATTTTACTCTTCAATTCCTTATTAGCTAAGTAACTGCTTTTACGGTTTCAATTTGTTTGTTTTGGTTTTTTAGTCCTTTCGGTCCGTTTAAAAAAAAAATTTCTTTTTAGCAACTTTTTGAAATGTTTAAGACATAGATTAAAAGGTATTTTGCTAGTACTTTTTTAAACTTCATATCAAGTTAACAAGGACAATCAAATTGAAATGAGGGAATATATTGTTGATTCCTCTGGGAATTCTAGATACATATACTCTGTTGCTACGAGAGTGGCTTTCTTTTTTTTACCCGTCCCTAAGAGTTCTCTCTTGTTTTGACTCGAACCCACACCCTTACTATTGGATAGAGGCTGCTTAACATCCGAGCAATGTCCTACGAAAGTGGCTTGATTAAGGTACTTGTAGTTTTAAAGTTGTCTAACCATGTAATCATATTTTTTTCTTTTTGGTCATAGCAATCTTATCTTCCTTCTTTCTTTCTTTCCCTTTTTTGTGTGTGCTGTATCTCAGCCAGTTCCCAAGAAATGTCATTGTTGTTCTTGTTTTTATTCTTATGTTGCAGTTTGTGTGACACATTTTTCTTTGTAGTCCGTTCCAAAAAAAATGACAGGCTAGTGGAGTTGAAAGCAATTAACTTTGCACTTTCTATTTGCCTGTAATGAGAAAGTTGTATAACCACATAAATATTATGACATATTTGAGACGGTAAGTTTCGAAAGTTTTATGGTCACACAAATGGTAGGAGTATGACAAGTTTAAGACCACTAGATTAAAAAATCTCCATTTCAGTCTTAAAATCCCCTGTCGATTTAAACTATGTCACATAAATTGAAACAGATGGAGTAATTATTTTTAGGGGTTAATACTGAGTTGTACGTTAGTTCTGCCTCTGCTTTGAAGGACTCTGCATCTTGCAATTACCAAAATGCAACATTTTGATTCTTATGGAAATGAATTTATCGATCCTTTTGATTTATTTTGTGCTGGGATTCCTTATGTCTTCTAACAGTAGGTTCAACGAATTCGAGTGAAATGAAGTACAACATGTAGTTAAGCAGCATGTACGCATGATTTCTATTATGTTTATGGCTTTTTGAATTAATGGACTCACCTCGACCAGGTTTTGCTGACGCCTACTCATCTAGCAATAGTAATGGAGTATGCGGCAGGAGGAGAACTCTTTCAGAGGATTTGTAAAGCTGGAAGATTTAACGAAAACGAGGTAATAAACGATAATTAAAGCGTGATTCTTCCCGCTTATGACTTGGCTAGATCAAAATTTTCATTTTACAGATTTCTATTGATTATTATTTACTTCTGACAAACAACTCAGTGTGTGTTGTGTGTGCGTGTGTGGGGGATTGTCATTCTTCTTATTTACCTGTCTTCATTGCACATCCACATCCCTTTTCGTATTGATACTCCATAGAGTACTACGTGTTGACGTAGTTATAGAGGCAGACTTAAATGCTCTTCACTGGAAATATGGATTACAAACTTCCGAGTATTAATGACATATCGAATGTTGAAACCAAAACTTGTTATGCCTTGAAAATTCTCATGTTTCGAGTTCATTATTCACGATGTTTGTATGCGTGTGTGCATGTGGACATTTTAATTTGCTTATTTATTTTGTTTTTGGTCAGGCAAGATTCTTCTTTCAACAATTGATATCAGGGGTTAGCTACTGCCATTTCATGGTAAAACATGGATATTAATTTCTCACACTTTCAAGTTTCGTTTCGTTTTTATTTTTCTTAGACCTGTTATGTTAATTGCTCTTTGGTTGCAGCAAATCTGTCATAGAGATCTCAAATTGGAAAATACGTTACTAGACGGAAGTACTGCACCTCGCGTAAAAATATGTGATTTTGGTTACTCCAAGGTCAATATAATCTTTTGTCATACCTTAATTCTAACTACAATTTCAGATTTTCGTTGAGCTCGTGTAACAGACTTCACTTGTCTGCAGTCATCTGTCTTTCATTCTCAACCAAAGTCCACTGTAGGGACACCAGCTTACGTTGCACCGGAGATCTTATCAAAGAAAGAATATGACGGGAAGGTACTAGTCATTTCATGTTCTCGTGTCAGCAGTTTCATAATGCTTGTATCATTTAACCGTTTTTTCGTTCTTGTTAATTATTGAGTTCATAGGTTGCAGATGTTTGGTCTTGTGGAGTCACTTTATACGTAATGCTGGTCGGAGCTTATCCATTTGAAGATCCCACTGATCCAAAAAACATCAGAAAGACCATATCTGTGAGTATATGAAAAAAAAATACATTTCTGTTATATACATAAAGTCGAACTTGATCGACTTATAGAAAAAGGAAAAAGAAACTGCTCCGATTTGAATGTCGTAACGTCGATTGAATGCACCATTCTGATTGAGTTTAATCCTCCGTAACAGAGAATATTTAGCGTCCAGTACTCAATTCCACAAAATGTCCGAATTTCTGTGGAGTGCCAGCATCTCTTATCCCGGATTTTTGTAGCAGACCCTGAAAAGGTAAATATTAATATACTCTTCTGCCAAAAAAAGCATTATATCATCATAAATCCAGTATGTGAAATGTTGTTTGGGTGAGTGACACGATCAGTCGGTCACGAATGACATATTTACCTCATCATATCAGCAGAGACAGATCTAGGATTTGAAATTTTTGATTTCTTTCAACGACATCAAGTTAATATAGTGAGTTCACAATCATATATTTATAGATATTTAGCGAATTCAGGCAAAAGTTACTGGGCTCACGTGAACCCACATATTACATTGTAAATACTCCCCTGCATCTTAGTATTTCCTATCGAAAATCACAGTCGTCCTAGGCATTTCAAGTTTTCATTTCACTACATTATACTTGGAAGGAAGGAGTGGAGGTCACGTATTAGGGTAGAAACTTAGTAGGGGTAGAGTGTTGTCTTGCCTTGCGAAGGTTCCTTGTAGTTATTGTCATATGTTGTTTATTGTGTTTTGATAATCACACTATTTGCTACTGTTACTGTTCTTCCTTATAAACATTGGACTACTTTTCTTGTTAACTGCTATGCTTCTTCATTGTTTTCATCTTTTGTGCTCGAGTTTGATGTACCTAAGCTGAGGATCAATCGGAAACAACCTCTCTAACTTCACGAGGTAGTGGTAAGATATGCGTACACTCTACCCTCCCCAAACCCCACTTAGTGGGATTTCACTGGGTATGTTGTATGCTTGGACGTGCCTCCATGTGTAATTTTTTTGAGTCCTAAGCTAGAACCTGTGTGTTCGGAATAGTCTAATCTTATTAGTGGCTTACTGGTTCTTTTGCAGAGAATAACCATCCCAGAAATTAAGAACCATCCTTGGTTTTTGAAGAACTTGCCTGTCGAATTCATGGAAGAAGGACGGAACGAGTGCATCGGTGTAAATAACCCGAGACAGAGCATGGAAGAAGTATTGGCAGTAATACAGGAAGCAAGAATTGCTTTGCAGGTTCTAGCAAGTTCATCTCAAGGCATTATGGAAGAATTTGATGAATTAGATGATGCTGATATAGAAGATATTGAAACAAGTGGTGACTTTCTTTGTTATTTGTGATGTTTAATTTGTGTCAGATATTTTGTAATATATAGAGGTTTTAGATTGAACAAACACATTTGTTCATTAAAGAACATGACTATTATGAAATGTCATTTGTTCATGAAAGAAAATGATTATTATGCAAAGTCATCTCTTAACTTCTGGATTCTTTATGAGTAGCCTAAGAATAAAATTTGCACATATATACAAGGGTTTTGTTGTATCTTGAAGGGAATTGCTTGTATTTTCACTAATATGTATACATACAATATCTCTTTAAGGAAAATGATTCTTCACGCCTCAAAAGTCATCTTCTTTTTCAATTATTTTTCTAACAATCTTAAAGAGATATTGGGTTTGAAGAAGATAGAAACAACTATGACAATCCAAAAATGGTGTTGCACTCCGAGAAGTAAGGATTTCCATTTTTAAGGAAAAATATTGATGATGATAACCCTAAATTAAGAAATAATACCAACGATTTTGATATCTGAGATGGCAAAAGTGATCACAAGAGCAATGGATCAAGAAGGAAGCGATCAAAATCGGGTTCTACTATCTTAAGTTTGCGTATTAATTAATTATTTTATCCTTTGAGATTTTGAGAAGTTATTTTCATAAGTATTAGGATAAATAATTCACTTGTGATGATCTTGAAATACATTTTAAATAAGTTTAGGATTGACATATTTGGTTTGCTTATTATAAATTTGCTATTTGGGACAAAGAAAAATATTATAGCATAATTGTGTTGCATAAAGATCATGTAAATTTTAATTTCAAGTTATGAAATAGTATATGAATTTAGTTATTTTGCTAGATAATATCTATACGTGAAAGTTATTAGAATAATTTAAAATTAAGAGAAATGATAATTTTTATTAGATCATGCCTTTAGTAAAGGTTGAAGACTGTCTTCTTATTAAATGCTCGTGTATTATAGTATTGGAACACAATAAACTTGACAACAAGATGATCTACTGACGTTAATTATATGTCCGCACAAGTTACCAAAAAG

>PGSC0003DMG400010356

ATGAGATGGGAGGGAGAATATATATATAATATGAGGGTCGATTCACATGAGAACTGTTTTGATTCGCCTCAAATTATTCATAGCAGTAAGTGAAAAATGATTCCCCAAATTAGCCTTGTCACAGTGAGTGTGATATCATTGCACCTTTTCAACTACGATAGCAACTGTTGTAGTGACAAAATTGACAGACACTAATCAACTAACATTATGGGAAATATCTATAATGTTCCTCACTAGAAACCAATGCCTTCATCCATGGTTGATTTACTTATAACTTTGTATATACTCATTCACTTTTACTTATCACATTATGCTCATTAAAAAAATAATGATCGATATGACTATCTTGCCATACTACCCCTATTAATTGATGTTTAGTTTAAGTCAAAAAATAAGTAATTAATGTTAAGGACAAAACACTAGAAAAAAAAATAACTGTCTTTTCTTGATATGTTAAAATTGACAAACAAAAGTGAAAATCTAATTTTGAAATAGTGGACAAGTAAAAGTGATCGGAGAGAGTATCTGAATCTGTGCTATTCTTTTGCATATTCTTGTATTGAAGTTCATCAAAAAGGTTTAAAAGAAAGAGAGGAAGTTGGAGTAAAGGATCCCATAATAATGATAGAAGAAACACAAGAAAAGGATCATATTCAAGTAAGGGACACTTGACATCAGAGGAAGGTACATCCTTAAACATTGTTCACAAAATTTCAGGTCTTCCTTTTTCATCTCCGATTACTCGATACATATAATTTCTACATGCAAAACACGATCGAGAATAAATATCATTTCACCAATTCTTCATTGATCAAATACTTGAAACATTTGCTAGGCAGGAATGATGTCTATAAATCATATCAAGGCTGCCTGGATTGCCTATAATGTGCAATCTTTTTGCATTCTAAGCCTCTTAATCCAAGCTCTATTGCTTCTTTTAGCACCATTCAGGAAGAGAACAGGCAGTAAAATCCTGATGGTGTTTGTTTGGTCAGCATACATGGCTGCTGATTGGGCCCCTGCTTTCATTCTCAATCTCATTGTAAATTACAGCAAAACAAGACATGACAAGACAGAACTTATGTCATTTTGGGCTTCTTGTATGTTGTTGCATTTAGGAGGTTCTGATCATGCAATAGCATTTTCCCTCGAGGACAATGACCTGTGGTTTAGGTATGCTTTCACGTTTGTTTTCATGTTCCTGTCCTCTGCCTACGTGTTCTACTTATCGTTCTCCCAAGTAAACTATCTTTGGATCCCAAATGTCCTAGTGTTCCTGGCTGGAATCATCAAATGTTACGAGAGGGCACGTGCTAGGTTCATTGGGAGTATGGATAAGTCCATAACATTGAGTAATCCTAAGTACGAAGATGTTGTGAAGAGGAAGTCGTCAGAAGAACCTCACAATTTCCGAGCAGGAGAGCTGGATGATGTGGAGGTTATACAACTTGCATATACAATATTCAGGGCCTATAAAGGTGTTCTGGTGGAGCACAAGTTCACTTTTGAGGAGTATGGTAGAATTCAACAACTCTTGCAAAACAGAACTGATCTGTTTGCTTTTAGAATCGCGCAGGCTGAACTCGAGATTCTACATGATGTTCTATACACTAAGGCCCAAATTTTCCATGAAAGTTTTGGGAACTCAGCTCGATCTGTCTATTGGGTTCTGCTTCCTGCAGCATTGGCCTCTTTCTCTTTGTTGGTGAGGAACAACAAGCAAAAGCAATTTGATCCGTTCGATGTAGGACTAACCTATAGCTTACTCATTGGAGGTATGTTTTTGGATGCCATTGCTTTTGTTATCTTGCTTTATTCCTCAACTTTCACAATTGCCACAATGTCAAGGGCAACGTCGAGGCCAATCACTTGGGTGGTCAAATTCCTCAAAGCTTTAAAGTGGCCACGGCCACGTGCTCTATGGTGTTGGAGACCATCTCTCCAACAATTCAACCTCATAACCTATGGTTTAAATCGACCTCCAAAAGCATGGGAATGCATAATTGATTCCCTACACCTTACAAATTATCTTGATGAGATGATATATGTGAAAAACAAGAGTCTTTCTCCTATATTGGAAGCTGAAATTCTAAGACAAGTAGAAATACGTCTAAATAGGCATGATTGGGATTGTAACTCGTATGCTTCTACATTCACTAAATGCACCAGCAAGTTCCCTGTGTCGAAGGAGCTTGACAAGAACGAGTTCCTCATAGTATGGCACATTGTGACGGAGATTTTCTATAATGTTGTGGATGTACCTGAGGAAAATATTGGACCTGATTCAAGAGAGTTGAACAAGTTTAGAGAATGTTCAAAGTCTCTATCAGACTACATGATTTACATTCTTATTTTTCGCCCTTTCTTCATGCCTGTTCCTAACAAGAAGAAGATAGAAGAGACCAACAACAAGATAAAAGAATTGCTTCATGAAAAGAAAAATTCAAGCCAACAAGAAGCTTGCCAAGAGATCATGAAAAAAGCTGCTGAGGGGAGAATTCCTCAGGAATCGTTCTTGTATGAAGCTTTTAAGGTTGTCAACTGTTTGCAAACTGAATCTCCTTGGTGTCAAGACAGAGTTAAATGTTGGGAGATTATTCATAAAACTTGGATCGCGATATTATTTGATGCTGCCAAATGCTCTGCACCGCGTGCTCATGCACAATATCTTGTGAGAGGTGGTGAAATTTTATCCTTAATCTGGTTGTTGGGTGCTAACTGTGGATCCATGGCTCAGTTTTCATTTTTTGAAGATTCTGATGAGGAAAATCAAGCTAGTAATATGGCTAGGCTCGAATCGATATATTACTAAAGTTTAGGAATGTAACATATCATGTGAAGAAGAGCATCATACCAGTTGGTCTTAGGATATGGTATCTTTTGTAGTGTTGAAACTTTGTTGTTGTTATGAATAAATTGAGCTAATGGATGTTCATTGAGTACTTTTTTTGAAACATTTGAGTACTTTTACATATTATTTATACCATGAACCATGTAATTTTACATGGACGATGTACTTAATTCTTGTGAATTCTGGGGGAATTTTGTGTCATATAGAGTTCCTTATGTTAAAAGCTTACCCACACCTAGTGTTTATTACACCAAACCATACTAATTTACATGGTCAAGTCATATATTAAGGTGATAATATGTATAATCAGTTAAATTTTTACTGTATTCCTACCCTACGAGAAACATAATTGAATGAAATTATCTAATAGTAACAATCTCCACACTTCTTTTTTCCATCTAAGCATCTCTAATTTTTCTTGTTTTACAAGCTACTAGTATGAGTCTCTAGTCAACTTCAAGTAGAGGTTCATGTTTCTCGATAACATGTTATCAATACGAGTCTCTACTCAACTTCAAATAGAGATTTATATTTTTTTGGTGACTTTTGTATTATAGAAGATGTTAGCAATAGGATTTCCACGCCACAGATGGGAGGTTCTTAAGCCAAAAACAGACACAAGAATATATGCTTTCTTTTAAAAATTCTAACTTGGTTACCACTCAGAGCAGCAGCATATATCTGGTTTTAAGATAGATTACTCTTGACTTTGAGAATTTTCTTTGCAAAAAATATCATCATTTGTAAGCTTATCAAGGCAATTGATTTCTACATGAATTGTGCAATACTTCCCAAAAGAGCAAAACCAAAGAACAACAGCAGTAGCAGCAACAATATTAGTCGTCTTCCGCGTAAATTGGAAGAAATCTTACAATATGCAGAGCCTGGTGTGGAAGTACTTTTGGATGGACAAATCAGGGTATATGTATATCCCCAATTACAAAACTATCCATGTTTTATA

>PGSC0003DMG400013240

TTAACCATGTACACGTTTTGTTTCCCCACTTGCATTTACACGTTTTGTTTCCCATATAAAAGTTGTAGCTTTATGTTGTTTCTCTAAGCTAATTTGTGTGGTAGGACGTATCGTAAATAGTCTCTCTACCTTATAAAGATAGTGGTAAGGTCTGCGTACACTCACCCTGTATAATGTTGTTGTTGTTGATTTTTCATAGTCAATGTTTCTATTAACTTTTTGTTTTTGCTTGCCTTTTTCTTATCATCAACATAAGGTCCATTACATATATTTGCTGATAAATTGTGGAGCAAGAAGTGATTTTTCTGTATATTTCTAGCATTAAGGTCAGTAAAACTGATTAAGTTTCACTTTTATTTTCCTTAGTTTTTGTTATTCTTATTTTTGTTCATGGCATTGTTCATTAAAAGCAACCTTATGTTGTATATGTATTCATTTGCCATTTGCATGGATTTTTTTATATATAAATTTATGCTATGTATTGAAAGTACTAAGTTCAGATGAACTCGACAGGGTGTGCTACATCCGCCTTTCTATATATAAAGAAATCCGCTAAATATGTATAAATATTTGATTATGAACACAGTTATTGTTGTAGAGTGTAGACTAACTTGAGTTCGGCGTGGCTGGAGGAATCCATAAACTTCAACTACTGGATCGCCCTTTGCTTATGATTAATTGTTGTTTCCTTTGTTTTTTTTTTATCTGATTTCTTCATGATATCTCTGGAGAACTTCTTTGTTCTCTTGAAGTTTAATCATACTATATAGTCACATATAAAAAGGACAGTCTGGTGCACAAAGCACATGCACATCCCAGGTTAGAAGGGTCTGAGGAAGGCCGCTTTTTGACTAACTTCAAAGTTTGGGTTCAAACATAATAAGTTTCTGTTCTGTTTGAGTTAGATATCTTTCCATGTGTCTCTGGGATTCTGTTTTTCTGTGAATTTAGTGTGTTGCTGTCTGTTGTTTTCCTGCAGAATTCAGTTCCTCGGGGAAAGATGAAGATCGATAAGTTCATAGATAACATCAAACTTCTGTTTCACCAATGGGAATTGGGATATTCCATTATGTTTAGCATAACATTGCAGGCTTTTCTTATATTTTGGACATACAAAAGAAGGAATTTAGTTGGAACCGTGAGAAAGACGTTGTGGCTTGGGATTTTCCTTGCTGCTGATTTCGTTGCAACGTTGTGTCTAGGAGCCATCTTTCACTTGCCTTTGGGACCGAGTGTGTCATCATCACCTGATGATCATAACAAAAAAATCAAGAACCTTTTGATTATCTGGTCACCCTTCATCCTACTACACTTGGGCAGGCACAGTACACTTGTAGGAATTTCCTTGGCAGATAATGAATTCTGGCTGAGCCATTTCTTTAAATTTTTATACTATGAGTTCATAGCTCTGACGATAAGTATTCTGGGATCCTCGACAAGATGGTTCACTTTGGCATCGCGCATCATGTTGGTTGGAGGCTCTTTAAAGTTTGCTGAAAGAGTTTTGGCGCATAAAAAAGGAAGCATGGATGAGCTCCGTAGATCCATTTTCAAGGAAAATGGCCCCGGTGGCCTTGGCATTTTGGAAATCAATCAAATATTAGATCGTCTCATTAGCGCACATAGACAAGGCCATCTTCAAGAACAATTTCACAATGAAGAAAATGATCATCTAGCCGATGATGCTAACAATGAAGCTGATGTTCTTCCTGTGGCCTCCAGGGAAGCAAATGTAGTAGCCCTGTGTGAAGCTTATCATGCTTTCAACAAGCACAAAGACCTGTATGTTGAAAGTAGGTCGTACGAAGATTGCTACAGGATTAGAGGTGGGCGTTGGCGCGGAGAAACTTTTAACCTCGTTGATGCTGCTACTGCTTTCAACAACATAAAGATGGAACTATGTTTTGCATATGACCATTTCTACACAAAAATGGCATTCTTGCATACTTGGTGGGGCTGGATTGCCCGATTTGTAAGCTTACTTTCAGTATTTATCGCGCTTATCCTGTTTTCTGTTGGAGTTTTCACCAACGATGAATTTAACCGAGCAGACAACTTTGACAAAATTGTCACCTTTCTCCTGATAATTATGGCATTCCTCCTTGAGGCAGGGAGTTTGATTGAGTCCATACTGTCGAAACGCTTTTTGGTGCAGATAGGGATCCGTAAGGGGAAAATGTGGCGTCTTTTGGAAGGATTTATTGTAAGGCTTCCAGGTAGATGTATAATCACCCGAGACAAAAATCCTACCTTAAAGATAGGACAGTTCAGCTTACTTCCTTACTGCTTTAATGATTCCAAGCAGAATGCCCCTTTTAAAAAGTTCTTAGGCTATTTTGATCTGAAACAGTTCTGGAATAACCTTAACTACATTAGCCGTATCTCCATTGAAGACGTTTATGATCATCGGCCCGATGAGGAAAGCAAATTCCTAAATTTGGTCTTTGACTGTATTGCACAACGACTAAGGAGAGAGCGGATGGAGGATGATCACTTCAGGTTCCAATCTCTCATCAGCACAGCTGTGCGTGACCAAATACCTGATCATTTCTTCGAATCCACGAATTTTGAAGAATGTGTTATAGTTTGGCACATAGCCACAGATGTGTGCTACAGACAGTGGCGGAGGCAGGAATTCTATCAAGGGGGTTCAAATAAAAAAAATGTTGTTGCTTGGAATCGAACCCGTGACCCAAAGCTCACATAGGCTGAATCCTGACCCCCATAAACCACTGAGCTACTCCTTTGACATATGCTCAGGGGGTTCAATTTTAAATATATACACGTACACAAAAAAATTGATCGTATATATATAGTGTAATTTTTTTTCCGAGGGGGGTCGGATGACCCCCCTGGACACACTATAGATCCGCCCCTGCAAATAATAGTGTAATGACCTGATTCCATCGTTATGGAAAAGCCAACAGGGAAAAATTTTGGGCAGGAAAATAATTTTGGTAGTAACTTGAGAATTCATTGCCTAGTCCAGTTTTGGACGAAATTGGAGTCAGTGAGGTCTAGGGAAATCTGGTAATACTCCTTTGACATATGCTCAGGGGGTTCAATTTTAAATATATACACGTACACAAAAAAATTGATCGTATATATATAGTGTAATTTTTTTTCCGAGGGGGGTCGGATGACCCCCCTGGACACACTATAGATCCGCCCCTGGCTACAGATTATACTATCACTTGGAGGAATCACATTATCTTCAGTATGCCAAGTACCTCTCAGACTACATGTTATATCTACTGCAAGTTCATCCAGAGATACTGTCACCACTGACAAGAAAAGAGCTACAAAGCTATCGAAATGTATGTGATGCAACTAACAGATTCTATGATGACATGGAGATTCAGAGTCAGGTAATAACAGAGATCGTCGATAAGATTGAACCTGCATACACTAATTTACGTGGAATCCCAAGAGAGAGACGACCAAGGAACATCTCTAACTTGTTACTAGATGGCTATGAACTAGCTCGAGAGATGGGTTGTCAACGTATCCGGGAGTATAGGCTAGGTGAAGAAGTGGATAACACATGGAGAGTGTTATTTGAAGTCTGGGTGGATATACTATCCTATTCTGCAGTTTTTGTTCCAGGGAAACAACACGCTGAGCATCTTGTCACCGGTGGAGAGTTCATAACATATATCTGGTTGCTTATCGTCTACTTGATCGAGAACTGATCGATTATTATTGTTTTTTTTCTTTGTTTAAACTTTCTCTCCAGTTCAATGTATACTTGGATGCTATGTACTGGGGGAAAATGCTGTTACAAAATAAGATGTTGAATGATCAAGAAAGCTTTTCATTTTATTCACTGAACCAAAGGAGTATCTATTTACAGTACAAAGAGCGCTAGTAATAGGAAATATACAATAAAGAAAGACAGAAATAAAAACAGAATAAAGCATTGTTGTATTAAGGTATTTTGATGATTCAACAAACTATGGTAAGTTAGGATCTGGTAAAGGAACCTGATCCTTCGAGCTGAGGCCGCACACGACAACTCAATGGGGTGCAGCTGTAAAGCTGGCAAAACTGCAGAGGTAGGTGGGCACGACAACAGAGGGTTCTCGGCCAATGGCGAGCTACTAGGTTGTTTTGTCTTTCTTATTCATTAGACACATTGACAAGAAACAACCTAGCGTTTGCAAATGAAAGATATTCAAAGCAAAAGTCATTCTCTCAAGGAAGAACTATTCTCAAGCAGCAAGGGACCTGATCAAGTCATCTACAGAAGTTTTAAATTATCATTTGAGTTTAGTGTCTTGTGTTTGTATGTTATAAGATCTATTCCTACGTTATAAAGGAAATCAGATCATGTCAGTTTAAATTGCAAAAGAGTTGAGTTCATCGCTATAGTTAGGCAAGTTGTGTTTGTTTGAAGTCTATGTTAACTAGAGGTAGTTGATATAATAGGCAATAGGGTTATTGCTTTGTATCTTGTAATAGAGGTGTTATAAGGTGTGTAGGATTAAGAGTTAATCCTTTGTGATCACAAGAGTCTGTACTCGCAAGTTGCTTAAAGTTAGTGGAGTTTTGAGAAATCTTGTGTGACAGGCCGTGGTTTTTTCCTCCCTTGAGCAAGAAGGTTTCCACGTAAACTTCCTTGTTCAATTTGGAGATAAGATATGGATGTGCTCGTATCATCGCCTTTGTAAACTGTTACAGAATCACTACAGA

>PGSC0003DMG400029315

AAATTAGTAAGATACAATGATTTTGATATAATTAAAATTATCCTTCATCAAATCTCAAACCATTCTTCAAATTAATAAATATTAATTTATCGATTAAATAATATCTCTATAAAATAATAAATCTTCATGGTCCTAGCTATATTAATTTATAGAGGTTTTACTGTATATATAAAAAAAATTACAATAAATTCAATATTTAATAGTTAATAATTTATGTATTGTGATCTCTACATAACTAAATAATACTTGCATAACTAATACATGCATAACTAAACCCTGCATAATTAATACATGCATAACTAAAACCTACATAAATAAACCCTGCATAACTAAACTATGCATAATTAATACCTACATAACTAATACTTGCATAACTAAATTTTGCATAACTAATACCTACATAACTAAACCCTGCATAGCTAATACCTGCATAACTCTAATCAGTAACCAAACGGCCCCTAAATGTATTAGTTAGTATCTTAAATATTACAAGAAGATACTTCAAAATGCAAGGTAAATATTCATGGTCAAGAATTTTTCAATGAGCTATTAATCAATATCCTGTAAAAAGGAATGTCAAACCATAAATAATTTGAACTTTATAGGTTATGGATTCTAGGACAACGAACTCAACTGCTCGTTTAGGTTTCTAAATTCAACTTTTGTACATATTTAATAAATTTCTTCGCGCATATACATGATTTCACCCAAGCTAGTGGATTTTACCGAACCCATATCCTGAAGACTACCTCCATCCCTTTGTGAGTCCGAGTCAACTTTATATAATAGTCAAGTCTTGTTATGTTCCTAGTTTGTGGTCCCCCCNNNNNNNCGCCCCCCCCCCCCCCCCCACTAAGTTGACTCAACAAGTCTTCTAATGGTACTTCCAAGTTGTTTCCTTTTTCTTTCTTTCTTTTCCAAATACCTGTGATTATAAGTAGTAGTAACATTTTCATCCATTGCAGTAACCAGCTGCCGGGAAGCAATTTATTGATAAACCATGCTTTTACTAGGTACTGTTTGTTTCTGCTTATTGTTCTTGCTTCATCTAATTTTGTTTCAAAGTCAGTGTTGAAAATGTTAATTTAAAGTTTTAAGTGTCTGCTACTCAATCTAGGTGTGTTGTTAGAGTCATTCGAGAATGTTTAGTAATTATGGTTCGTGAAATTGAACGTTTAATGGTTAATATTGTTGCTTTATATATATGGGAGGATAGCGTGTTGTTTTGTAAGAGTGGGGTTTTTCTAATGATATAGGTTTGCTTTATATTTATTTCCCTTTTCCATACTCATTGTGCCTTATATTATGAAGATCCCACCTTATAGTTGTTAGAGCGGATTAGAGTCTCATATTGATTGGGGAATGAACTGATTTTTTGTTTATATGGACTTAGGCAGCTCTCCCCCTCATGAGCTAGTTTTTGTGGTTGAGTTAGGCTCGGATGTCATAACTTTACATGATATATCAGAGCCAGATCTATTTCCGATTGAGCATGGTCTAGTCGGGTCTGGGCGTGAAGGGGTGGGGGGGGGGGGTGTTAAAGTGGGTTAGAGTCCCATGTAGATTGGGAAATGGGCTGATGGTTTGTTTCTATGGATTTGGGAAATGTTCCCTTCATGAACTAGCTTTTGGGGTTGAGTTAGGCTCGGGTGTCATGTTCTTACAATATTCTGACTTAGTTTTCTAATTTTTATCTTTGTTTTGGCAGCTGCAAGTGTAATATTTGTTAATGCTGAGAGAAGAAGGCTAATTGAAGTTATTCCGGACAGCTTGAAGAAAGCATGGAAAGATTGGGAACTAGAAGTGCTGATTCTAGGAAGCCTTATCCTTCAGATTGTGCTTATTCTTTTTGGCAAACACAGGCAGCGTGTGGCGAAATTATGGATCAGAATGACTCTATGGACATCTTACTTACTAGCAGATTGGATTGCTATTGTTGCATTAGGTATCATCTCTCAGAATACGTTGGATAAATGCAAACAAACAAGTGTTGATGACAAGTTAAAGGATGAATTGATGTCGTTCTGGGCGCCATTTATGCTGCTGCATCTTGGCGGTCCTGATACTATCACGGCTTATTCATTGGAGGATAACGAGCTGTGGTTAAGGCATTTAGTCGGTCTGATAATCCAGAGTGGACTAACCTTCTATATCTTGCTTGTGTCCTTGCCAGGCTCTTCTTGGCTTCCATTTCTAAGCTTATTCATCTTCATATCTGGTCTTATCAAGTTCTCTGAGCGGACATGTGCTCTCCGTTCAGCAAAAATCGAGAATCTCAGGGACGCAATGCTGACATCCCCTGATCCTGGACCAAATTATGCTAAATTCATGGAGGAATATACTTTGAAGAAAGCTGAGGGCTTCTACGTGATGGCGGATGAGGTTAAAGAAATCTCACTTCCAATTGATCATTCTTACGATACTGGTAAAGATAAATTGCTGCTGATATCTGAAGCTTTCGATCAGTTCCAGACTTTTAAACGTCTATTTGTGGATCTCATCCTTAGCTTCCAGGACAGAGACAACAGTCGATTTTACTTCAAGCAACTTCCTCCAAAAGAAGCTTTTGATGTGATTGAGGCAGAGTTAGGATTTGCATACGATTCCTTTTATACCAAAGCACCCGCCATTTTTACTCCTCTAGGCTGCATTCTTCGTGTGGTTACTTTTTCTTGCATTTTTTTTAGCTTGATTTCATTTTCTTTATGTAAGGAAAGATCGAAATACCACATACTTGACTTGATTCTCACTTACCTGCTGCTGGTGGTGGCCTTTCTCCTGGAAATATATGCATTGATCGTTTTGCTGAACTCAGACTGGACAAAGAATTGGCTGAGCAAAGGGAAACAGGACGGAAAGTTTTACCAGTCGTTCCTCCTCAACAAGCTGTGTCTGCGAAGATCAAATAAAATGAGGTGGTCCAATTGCACACCCCAATACAATTTGTTGAGCTACTGTGTTGAGTTCAAGCCCCCCCGGTGCTACAGGTTCCAAAAACTCTTTCTAATCAATGAGCTCTTGGAAAAACACAAGTACAAGAAGGATGAACTAGTCACCCCGGAGTTAAAAGAGCTGATCTTTAACCATTTCCAAAAGTTTGCCGGAGAAAGTGATAACGAGCCTGATCATCCAGTCTTATGCACAAGTAGAGGCACTAAAGCACTTACCAAAAATGACTGTTCTTCCCTAGTTTGGTCTACCGAGGTAGAATTTGATCAAAGCTTCCTTATTTGGCACATTGCTACTGATCTATGCTATTACACTGATGATGGAAGTACTCCTCGTTCCATCAAATCCAAGCAAATTAGCAAGCAGTTATCAGATTATATGTTGTATCTTTTAGTAGTATGCCCCTTTATGCTGCCAATAGGACTTGGGATGATCAGATTTAGAGATACATGTGCTGAAGCCAAAGAGTTTTTTACAGAGAGGAAAGTCGGAAAAGACTTAGCTATAGCTAGTCGCAAGTTGCTAACAGTGAATACTGAAGTTAGACCTGCAAAAGTGAAAGGGGATAGGAGCAAGAGCGTGTTGTTTGACGCGTGCATACTTGCCAAATCATTGAAAGAAAAGGGTACTCAGAGAAAATGGGAAATCATCAGTGAAGTATGGGTGGAAATGCTTGCTTATGCTGCTACACATTGCAGAGGGAACCATCACGCTCAACAACTACGTAAAGGAGGAGAGCTTCTTACTCATGTCTGGCTTCTCATGGCACATCTTGGCATCACAGAGCAATTCCAGATAAACAGAGGTCATGCTAGGGCCAAGCTCATTGTCAAGTAATAAACTCGGATTCTCGTGCTGATCAAGACTTAGTAGGTAAATAAATGTGTGTTCTCTCTAATCGTTTTAAGTTTTTTATCATGATATTATAGTAGAGACAGGCTCAGAAAACAAAGTTAACTTTTAGCATGTACCTGTACTTGTTGTAACTAGTTTCTGGTAACGTACATCGCACGTTTGTTCTTTATTATTATGATTAATAAATTTTTTATCTATAATAGTTTTGAGTTATAAAATGGAAATATTGATGGAGTCAGGAGTGATTTTTTTTTTTTGAGAATTGGTAACATGTCAAGAGTGAAAATTGTTAACCACTAACTAATAATCTCGTTTACAGCTTATATGTTATATTCAAATTCAATTTCTATTATATGTATTTCTCTTTAAGATTGTTATTCCTTTTTCATATTTAGAAGGGATAAGGGTCTGAAAAATACCTCAACTTTGATTGAATTTGTTGTTGCGATACTAAACTTTCATGAGGACCTATTACCTCCCTAGACTATTTAATACCGTATTTTAAACATATATATTTGTCCACGTGGACATAAAAAATAATGCAAAATTATAAATAGTAATGTGTCCACGTGAGCACATATATACCTTTAAAATACACTATTAAATAGTTCAGGGGGTAAAAANNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNNCTAGAGAGGTAATAAGTCCTCATGAAAGTTTAGTATCGCAACAACAAATTCGACCAAAGTTAGGATATTTTTCAGACCCTTATCCCTATATAGAAAGATAAATTTTAATGTTGTTCATATTTGATTTTTAAGTACTCATTCATATTAAATTAAATAACCTCATTTGTTAGAATATGTCTTCTTCAGAGATTTTCTCGATTTCTTTTGCTCGATGTTGTTGAGGGAGAAGCATTAGTTATCCTTTTTAACTCGAAATCTAGGAGACTAAAAGCAAAATATAATTTCAATAATTCATTGTCAAACTAAAGCTATC

Example of the FASTA file.Example of the GFF file.

PGSC0003DMG400023803

JGI v4.03

gene

1001

4287

.

+

.

ID=PGSC0003DMG400023803;id=PGSC0003DMG400023803.v4.03;pacid=37440166;uniprot=J9ULI1;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

mRNA

1001

4287

.

+

.

ID=PGSC0003DMT400061170;Parent=PGSC0003DMG400023803;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

exon

1001

1120

.

+

.

ID=PGSC0003DMT400061170:exon:1;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

CDS

1001

1120

.

+

0

ID=PGSC0003DMT400061170:CDS;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

exon

1329

1403

.

+

.

ID=PGSC0003DMT400061170:exon:2;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

CDS

1329

1403

.

+

0

ID=PGSC0003DMT400061170:CDS;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

exon

1534

1635

.

+

.

ID=PGSC0003DMT400061170:exon:3;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

CDS

1534

1635

.

+

0

ID=PGSC0003DMT400061170:CDS;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

exon

1754

1807

.

+

.

ID=PGSC0003DMT400061170:exon:4;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

CDS

1754

1807

.

+

0

ID=PGSC0003DMT400061170:CDS;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

exon

1921

2013

.

+

.

ID=PGSC0003DMT400061170:exon:5;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

CDS

1921

2013

.

+

0

ID=PGSC0003DMT400061170:CDS;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

exon

2102

2194

.

+

.

ID=PGSC0003DMT400061170:exon:6;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

CDS

2102

2194

.

+

0

ID=PGSC0003DMT400061170:CDS;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

exon

2581

2685

.

+

.

ID=PGSC0003DMT400061170:exon:7;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

CDS

2581

2685

.

+

0

ID=PGSC0003DMT400061170:CDS;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

exon

2771

2869

.

+

.

ID=PGSC0003DMT400061170:exon:8;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

CDS

2771

2869

.

+

0

ID=PGSC0003DMT400061170:CDS;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

exon

3946

4287

.

+

.

ID=PGSC0003DMT400061170:exon:9;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400023803

JGI v4.03

CDS

3946

4287

.

+

0

ID=PGSC0003DMT400061170:CDS;Parent=PGSC0003DMT400061170;Name=PGSC0003DMG400023803;gene_id=PGSC0003DMG400023803

PGSC0003DMG400025895

JGI v4.03

gene

1001

3879

.

+

.

ID=PGSC0003DMG400025895;pacid=37436441;id=PGSC0003DMG400025895.v4.03;uniprot=M1CFW5;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

mRNA

1001

3879

.

+

.

ID=PGSC0003DMT400066617;Parent=PGSC0003DMG400025895;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

exon

3589

3879

.

+

.

ID=PGSC0003DMT400066617:exon:1;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

CDS

3589

3879

.

+

0

ID=PGSC0003DMT400066617:CDS;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

exon

3221

3319

.

+

.

ID=PGSC0003DMT400066617:exon:2;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

CDS

3221

3319

.

+

0

ID=PGSC0003DMT400066617:CDS;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

exon

3038

3142

.

+

.

ID=PGSC0003DMT400066617:exon:3;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

CDS

3038

3142

.

+

0

ID=PGSC0003DMT400066617:CDS;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

exon

2140

2232

.

+

.

ID=PGSC0003DMT400066617:exon:4;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

CDS

2140

2232

.

+

0

ID=PGSC0003DMT400066617:CDS;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

exon

1756

1848

.

+

.

ID=PGSC0003DMT400066617:exon:5;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

CDS

1756

1848

.

+

0

ID=PGSC0003DMT400066617:CDS;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

exon

1607

1660

.

+

.

ID=PGSC0003DMT400066617:exon:6;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

CDS

1607

1660

.

+

0

ID=PGSC0003DMT400066617:CDS;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

exon

1425

1526

.

+

.

ID=PGSC0003DMT400066617:exon:7;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

CDS

1425

1526

.

+

0

ID=PGSC0003DMT400066617:CDS;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

exon

1264

1338

.

+

.

ID=PGSC0003DMT400066617:exon:8;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

CDS

1264

1338

.

+

0

ID=PGSC0003DMT400066617:CDS;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

exon

1001

1177

.

+

.

ID=PGSC0003DMT400066617:exon:9;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400025895

JGI v4.03

CDS

1001

1177

.

+

0

ID=PGSC0003DMT400066617:CDS;Parent=PGSC0003DMT400066617;Name=PGSC0003DMG400025895;gene_id=PGSC0003DMG400025895

PGSC0003DMG400023636

JGI v4.03

gene

1001

4351

.

+

.

ID=PGSC0003DMG400023636;id=PGSC0003DMG400023636.v4.03;pacid=37472637;uniprot=M1C6E4;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

mRNA

1001

4351

.

+

.

ID=PGSC0003DMT400060762;Parent=PGSC0003DMG400023636;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

exon

1001

1120

.

+

.

ID=PGSC0003DMT400060762:exon:1;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

CDS

1001

1120

.

+

0

ID=PGSC0003DMT400060762:CDS;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

exon

1838

1912

.

+

.

ID=PGSC0003DMT400060762:exon:2;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

CDS

1838

1912

.

+

0

ID=PGSC0003DMT400060762:CDS;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

exon

2673

2774

.

+

.

ID=PGSC0003DMT400060762:exon:3;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

CDS

2673

2774

.

+

0

ID=PGSC0003DMT400060762:CDS;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

exon

2996

3049

.

+

.

ID=PGSC0003DMT400060762:exon:4;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

CDS

2996

3049

.

+

0

ID=PGSC0003DMT400060762:CDS;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

exon

3159

3251

.

+

.

ID=PGSC0003DMT400060762:exon:5;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

CDS

3159

3251

.

+

0

ID=PGSC0003DMT400060762:CDS;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

exon

3338

3430

.

+

.

ID=PGSC0003DMT400060762:exon:6;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

CDS

3338

3430

.

+

0

ID=PGSC0003DMT400060762:CDS;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

exon

3525

3629

.

+

.

ID=PGSC0003DMT400060762:exon:7;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

CDS

3525

3629

.

+

0

ID=PGSC0003DMT400060762:CDS;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

exon

3880

3978

.

+

.

ID=PGSC0003DMT400060762:exon:8;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

CDS

3880

3978

.

+

0

ID=PGSC0003DMT400060762:CDS;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

exon

4058

4351

.

+

.

ID=PGSC0003DMT400060762:exon:9;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400023636

JGI v4.03

CDS

4058

4351

.

+

0

ID=PGSC0003DMT400060762:CDS;Parent=PGSC0003DMT400060762;Name=PGSC0003DMG400023636;gene_id=PGSC0003DMG400023636

PGSC0003DMG400030830

JGI v4.03

gene

1001

3804

.

+

.

ID=PGSC0003DMG400030830;uniprot=M1D1F6;pacid=37475155;id=PGSC0003DMG400030830.v4.03;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

mRNA

1001

3804

.

+

.

ID=PGSC0003DMT400079214;Parent=PGSC0003DMG400030830;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

exon

3538

3804

.

+

.

ID=PGSC0003DMT400079214:exon:1;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

CDS

3538

3804

.

+

0

ID=PGSC0003DMT400079214:CDS;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

exon

3200

3298

.

+

.

ID=PGSC0003DMT400079214:exon:2;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

CDS

3200

3298

.

+

0

ID=PGSC0003DMT400079214:CDS;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

exon

2939

3043

.

+

.

ID=PGSC0003DMT400079214:exon:3;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

CDS

2939

3043

.

+

0

ID=PGSC0003DMT400079214:CDS;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

exon

2769

2861

.

+

.

ID=PGSC0003DMT400079214:exon:4;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

CDS

2769

2861

.

+

0

ID=PGSC0003DMT400079214:CDS;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

exon

2591

2683

.

+

.

ID=PGSC0003DMT400079214:exon:5;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

CDS

2591

2683

.

+

0

ID=PGSC0003DMT400079214:CDS;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

exon

2454

2507

.

+

.

ID=PGSC0003DMT400079214:exon:6;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

CDS

2454

2507

.

+

0

ID=PGSC0003DMT400079214:CDS;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

exon

1908

2009

.

+

.

ID=PGSC0003DMT400079214:exon:7;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

CDS

1908

2009

.

+

0

ID=PGSC0003DMT400079214:CDS;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

exon

1214

1288

.

+

.

ID=PGSC0003DMT400079214:exon:8;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

CDS

1214

1288

.

+

0

ID=PGSC0003DMT400079214:CDS;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

exon

1001

1120

.

+

.

ID=PGSC0003DMT400079214:exon:9;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400030830

JGI v4.03

CDS

1001

1120

.

+

0

ID=PGSC0003DMT400079214:CDS;Parent=PGSC0003DMT400079214;Name=PGSC0003DMG400030830;gene_id=PGSC0003DMG400030830

PGSC0003DMG400023441

JGI v4.03

gene

1001

3007

.

+

.

ID=PGSC0003DMG400023441;uniprot=M1C5H7;id=PGSC0003DMG400023441.v4.03;pacid=37443559;Name=PGSC0003DMG400023441;gene_id=PGSC0003DMG400023441

PGSC0003DMG400023441

JGI v4.03

mRNA

1001

3007

.

+

.

ID=PGSC0003DMT400060263;Parent=PGSC0003DMG400023441;Name=PGSC0003DMG400023441;gene_id=PGSC0003DMG400023441

PGSC0003DMG400023441

JGI v4.03

exon

1001

1120

.

+

.

ID=PGSC0003DMT400060263:exon:1;Parent=PGSC0003DMT400060263;Name=PGSC0003DMG400023441;gene_id=PGSC0003DMG400023441

PGSC0003DMG400023441

JGI v4.03

CDS

1001

1120

.

+

0

ID=PGSC0003DMT400060263:CDS;Parent=PGSC0003DMT400060263;Name=PGSC0003DMG400023441;gene_id=PGSC0003DMG400023441

PGSC0003DMG400023441

JGI v4.03

exon

1191

1421

.

+

.

ID=PGSC0003DMT400060263:exon:2;Parent=PGSC0003DMT400060263;Name=PGSC0003DMG400023441;gene_id=PGSC0003DMG400023441

PGSC0003DMG400023441

JGI v4.03

CDS

1191

1421

.

+

0

ID=PGSC0003DMT400060263:CDS;Parent=PGSC0003DMT400060263;Name=PGSC0003DMG400023441;gene_id=PGSC0003DMG400023441

PGSC0003DMG400023441

JGI v4.03

exon

1489

1581

.

+

.

ID=PGSC0003DMT400060263:exon:3;Parent=PGSC0003DMT400060263;Name=PGSC0003DMG400023441;gene_id=PGSC0003DMG400023441

PGSC0003DMG400023441

JGI v4.03

CDS

1489

1581

.

+

0

ID=PGSC0003DMT400060263:CDS;Parent=PGSC0003DMT400060263;Name=PGSC0003DMG400023441;gene_id=PGSC0003DMG400023441

PGSC0003DMG400023441

JGI v4.03

exon

1660